Capabilities of Intel® AVX-512 in Intel® Xeon® Scalable Processors (Skylake)

This paper reviews the Intel® Advanced Vector Extensions 512 (Intel® AVX-512) instruction set and answers two critical questions:

- How do Intel® Xeon® Scalable processors based on the Skylake architecture (2017) compare to their predecessors based on Broadwell due to AVX-512?

- How are Intel Xeon processors based on Skylake different from their alternative, Intel® Xeon Phi™ processors with the Knights Landing architecture, which also feature AVX-512?

We address these questions from the programmer’s perspective by demonstrating C language code of microkernels benefitting from AVX-512. For each example, we dig deeper and analyze the compilation practices, resultant assembly, and optimization reports.

In addition to code studies, the paper contains performance measurements for a synthetic benchmark with guidelines on estimating peak performance. In conclusion, we outline the workloads and application domains that can benefit from the new features of AVX-512 instructions.

![]() Colfax-SKL-AVX512-Guide.pdf (524 KB)

Colfax-SKL-AVX512-Guide.pdf (524 KB)

Table of Contents

- 1. Intel Advanced Vector Extensions 512

- 1.1. Instruction Sets in Intel architecture

- 1.2. AVX-512 Modules

- 1.3. Automatic Vectorization

- 2. Skylake and Broadwell

- 2.1. AVX-512: ZMM Registers

- 2.2. Clock Frequency and Peak Performance

- 2.3. AVX-512CD: Conflict Detection

- 2.4. AVX-512F: Masking

- 2.5. AVX-512F: Compress/Expand

- 2.6. AVX-512F: New Shuffle Instructions

- 2.7. AVX-512F: Gather/Scatter

- 2.8. AVX-512F: Embedded Broadcasting

- 2.9. AVX-512F: Ternary Logic

- 2.10. AVX-512F: Embedded Rounding

- 3. Skylake and Knights Landing

- 3.1. AVX-512ER: Exponential, Reciprocal

- 3.2. AVX-512PF: Prefetch for Gather/Scatter

- 3.3. AVX-512DQ: Double and Quad Words

- 3.4. AVX-512BW: Byte and Word Support

- 3.5. AVX-512VL: Vector Length Orthogonality

- 4. Applicability of AVX-512

1. Intel Advanced Vector Extensions 512

1.1. Instruction Sets in Intel architecture

Vector instructions are an essential functionality of modern processors. These instructions are one of the forms of SIMD (Single Instruction Multiple Data) parallelism. Vector instructions enable faster computing in cases where a single stream of instructions inside a process or thread may be applied to multiple data elements. For example, the addition (multiplication) of two arrays element by element can be performed with vector instructions, which add (multiply) several elements concurrently. Other instructions in modern vector instruction sets include fused multiply-add (FMA), some transcendental functions, division and reciprocal calculation. Some instruction sets support vector lane masking, permutation, and shuffling. Depending on the data type and CPU architecture, modern Intel processors can operate on vectors of 2 to 16 floating-point numbers or 2 to 64 integers.

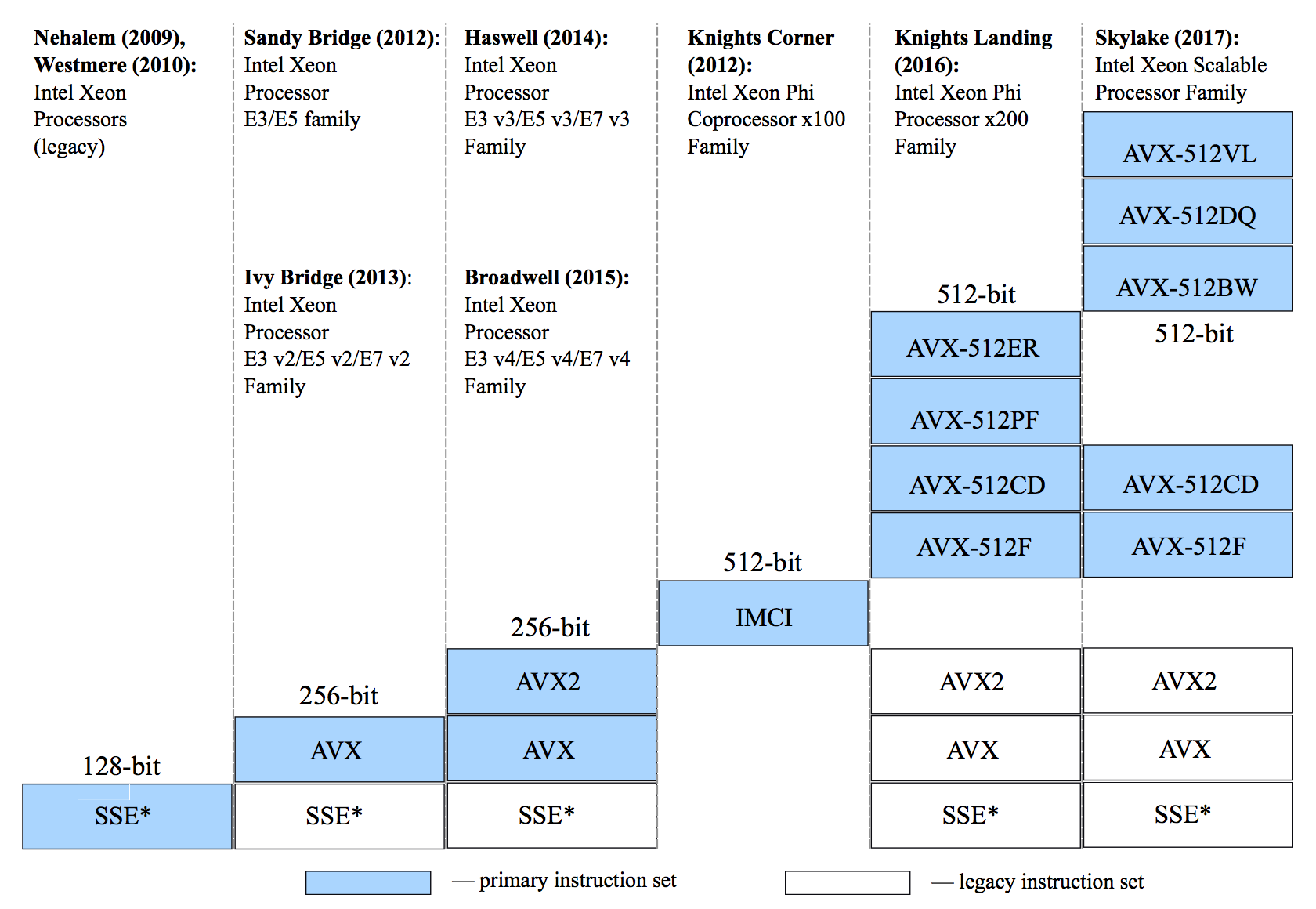

Intel architecture processors support legacy as well as modern instruction sets, from 64-bit Multimedia Extensions (MMX) to the new 512-bit instructions AVX-512. Newer instruction sets generally provide a greater variety of operations and data types and also support larger vectors. As new Intel architecture processors are released, they support newer instruction sets, usually maintaining backward compatibility with the older ones. The figure below shows the microarchitecture codenames, model families and supported instructions in enterprise-class Intel processors dating back to 2009.

Figure 1: Instruction sets supported by different architectures.

At the time of writing (September 2017), the most recent of the widely adopted enterprise processors use vector instructions up to Intel AVX® and Intel® AVX2. Specifically, these instructions are the foundation of the Intel® Xeon® E5 v4 processor family (formerly Broadwell) introduced in 2015. AVX and AVX2 had been the most advanced of Intel instruction sets until the introduction of Intel® Advanced Vector Extensions 512 (Intel® AVX-512 or just AVX-512 in subsequent discussion). AVX-512 as first used in Intel® Xeon Phi™ processor family x200 (formerly Knights Landing) launched in 2016. Later, in 2017, AVX-512 was used in Intel® Xeon® processor Scalable family (formerly Skylake).

The most notable new feature of AVX-512 compared to AVX/AVX2 is the 512-bit vector register width, which is twice the size of the AVX/AVX2 registers. However, AVX-512 is more than just a promotion of the vector register width from 256 to 512 bits. It adds capabilities that did not exist in AVX/AVX2 and earlier instruction sets, such as high-accuracy transcendental functions, histogram support, byte, word, double word and quadword scalar data types, masking, and others.

To developers and maintainers of computational codes, the introduction of AVX-512 is a call for action. AVX-512 instructions offer significant speedup when used in applications. However, this speedup is not automatic, and the software developer is responsible for enabling vector processing in computational codes. In this paper, we study the developer’s perspective on the innovations brought about by AVX-512.

1.2. AVX-512 Modules

Skylake and Knights Landing implement different flavors of AVX-512. The Knights Landing architecture uses modules AVX-512F, -CD, -ER and -PF. Two of these modules, AVX-512F and -CD are also found in the Skylake architecture, which additionally features AVX-512DQ, -BW and -VL. The table below shows AVX-512 modules found in Skylake (SKL) and Knights Landing (KNL) architectures and lists the main functionality of each module.

| Module | Functionality | SKL | KNL |

|---|---|---|---|

| AVX-512F | The fundamental instruction set, it expands most of AVX functions to support 512-bit registers and adds masking, embedded broadcasting, embedded rounding and exception control. | ✓ | ✓ |

| AVX-512CD | Conflict Detection instruction set allows vectorization of loops with vector dependency due to writing conflicts | ✓ | ✓ |

| AVX-512BW | Byte and Word support instruction set: 8-bit and 16-bit integer operations, processing up to 64 8-bit elements or 32 16-bit integer elements per vector | ✓ | |

| AVX-512DQ | Double and Quad word instruction set, supports new instructions for double-word (32-bit) and quadword (64-bit) integer and floating-point elements | ✓ | |

| AVX-512VL | Vector Length extensions: support for vector lengths smaller than 512 bits | ✓ | |

| AVX-512PF | Data prefetching for gather and scatter instructions | ✓ | |

| AVX-512ER | Exponential and Reciprocal instruction set for high-accuracy base-2 exponential functions, reciprocals, and reciprocal square root | ✓ |

Table 1: AVX-512 modules, functionality, and architectures supporting each module.

In anticipation of future Intel architectures, it is important to mention that not all AVX-512 modules are required to be supported by all architectures implementing them. AVX-512F, the Fundamental extensions, is the only module required to be supported by all future architectures implementing AVX-512.

1.3. Automatic Vectorization

There are two essential ways to take advantage of wide vector registers and vectorization benefits:

- explicit vectorization using assembly and intrinsic functions and

- automatic vectorization of loops by the compiler.

Although explicit vectorization is more controllable, it lacks portability. For instance, code using AVX-512 assembly or intrinsics will not work on an older processor that only supports instruction sets up to AVX2 (e.g., Broadwell architecture, BDW). Conversely, code using explicit AVX/AVX2 instructions will work on a Skylake or Knights Landing architecture, but in legacy mode, where it uses half the register width and incomplete functionality. In contrast, automatic vectorization is portable. If the compiler can vectorize a code for an older instruction set, then usually it can also vectorize it for newer instructions. Therefore, only a recompilation is needed to produce an executable for a new processor architecture.

The table below shows the compiler arguments required for automatic vectorization with AVX-512 using Intel C/C++/FORTRAN compilers 17/18 and the corresponding arguments for GNU and LLVM compilers.

| Compiler | Intel compilers 17.x | Intel compilers 18.x | GNU and LLVM |

|---|---|---|---|

| Cross-platform | -xCommon-AVX512 | -xCommon-AVX512 | -mfma -mavx512f -mavx512cd |

| SKL processors | -xCore-AVX512 | -xCore-AVX512 -qopt-zmm-usage=high | -march=skylake-avx512 |

| KNL processors | -xMIC-AVX512 | -xMIC-AVX512 | -march=knl |

Table 2: AVX-512 compilation flags for Intel C/C++, FORTRAN, GNU and LLVM compilers.

In Intel compilers, automatic vectorization is enabled at the default optimization level -O2, so no additional arguments are needed. In GCC, to enable automatic vectorization, use the additional argument -O3. Additionally, to vectorize transcendental functions with GCC, -ffast-math may be needed.

The listing below is an example of code that may be automatically vectorized by the compiler.

void VectorAddition(double * restrict A, double * restrict B) {

int i;

for(i = 0; i < 10000; i++)

A[i]+=B[i];

}Listing 1: Automatic vectorization example, auto_vec.c.

When the loop count (10000 in our case) is greater than the vector width, the compiler can combine several scalar iterations of the loop into a single vector iteration and run the loop over the vector iterations. With AVX-512, the vector width for double precision floating-point elements is 8, for single precision elements it is 16. When the loop count is not a multiple of the vector width, a remainder loop will be executed. Automatic vectorization does not require that the loop count is known at compilation time (i.e., we could use a variable n instead of the constant 10000). However, if it is known at compilation time, the compiler may choose a more efficient vectorization strategy.

The listing below shows how to compile the example code to produce an auto-vectorized object file (first command), assembly listing (second command), and optimization report (third command). Throughout the paper we use Intel compiler 17.0.2.174 and the corresponding arguments mentioned above.

icc -xCORE-AVX512 -c auto_vec.c

icc -xCORE-AVX512 -S auto_vec.c

icc -xCORE-AVX512 -c auto_vec.c -qopt-report=5 Listing 2: Compilation commands to produce an object file, an assembly listing, and an optimization report.

The first command line in the above listing will produce an object file auto_vec.o that can be linked to an executable and run on the target architecture.

The second command will produce an assembly listing in the file auto_vec.s instead of machine code. The snippet in the listing below shows the loop part of this assembly listing. You can identify the loop pattern by matching the label B1.8 to the combination of counter increment addq, loop termination condition check cmpq and conditional jump jb back to B1.8.

user@node% cat auto_vec.s

...

..B1.8: # Preds ..B1.8 ..B1.7

vmovups (%rdi,%r8,8), %zmm0 #4.5

vmovups 64(%rdi,%r8,8), %zmm1 #4.5

vmovups 128(%rdi,%r8,8), %zmm4 #4.5

vmovups 192(%rdi,%r8,8), %zmm5 #4.5

vaddpd (%rsi,%r8,8), %zmm0, %zmm2 #4.5

vaddpd 64(%rsi,%r8,8), %zmm1, %zmm3 #4.5

vaddpd 128(%rsi,%r8,8), %zmm4, %zmm6 #4.5

vaddpd 192(%rsi,%r8,8), %zmm5, %zmm7 #4.5

vmovupd %zmm2, (%rdi,%r8,8) #4.5

vmovupd %zmm3, 64(%rdi,%r8,8) #4.5

vmovupd %zmm6, 128(%rdi,%r8,8) #4.5

vmovupd %zmm7, 192(%rdi,%r8,8) #4.5

addq $32, %r8 #3.3

cmpq %rdx, %r8 #3.3

jb ..B1.8 # Prob 99% #3.3

... Listing 3: Lines from the auto-vectorized assembly code.

Vectorization is apparent in this code because the instruction vmovups loads the data from memory into the vector register, and vaddpd adds one vector to another. Here the prefix v- indicates the vector instruction, add is the name of the instruction, and the suffix -pd stands for “packed double”, i.e., the operation applies to a vector of double precision floating-point numbers. Other suffixes you may see include: -ps (“packed single”), -epi64/-epi32/-epi16/-epi8 for vectors of signed 64-, 32-, 16- or 8-bit integers; -epu64/-epu32/-epu16/-epu8 for vectors of unsigned integers; and suffixes indicating the absence of vectorization: -sd (“scalar double”) and -ss (“scalar single”). The names of the registers beginning with ZMM indicate 512-bit vectors of AVX-512. For other instruction sets, you may see 256-bit YMM registers (AVX/AVX2) or 128-bit XMM registers (SSE*).

In addition to studying the assembly, you can diagnose vectorization using the optimization report. To do that, compile the file with the argument -opt-report=n, where n is the desired verbosity level from 1 to 5. In our case, the command produces an optimization report file auto_vec.optrpt, in which you will find the remarks shown in the listing below.

user@node% cat auto_vec.optrpt

... LOOP BEGIN at auto_vec.c(3,3)

remark #15389: vectorization support: reference A[i] has unaligned access [ auto_vec.c(4,5) ]

remark #15389: vectorization support: reference A[i] has unaligned access [ auto_vec.c(4,5) ]

remark #15389: vectorization support: reference B[i] has unaligned access [ auto_vec.c(4,11) ]

remark #15381: vectorization support: unaligned access used inside loop body

remark #15305: vectorization support: vector length 8

remark #15309: vectorization support: normalized vectorization overhead 1.167

remark #15301: PEEL LOOP WAS VECTORIZED

remark #25015: Estimate of max trip count of loop=1

LOOP END

LOOP BEGIN at auto_vec.c(3,3)

remark #15388: vectorization support: reference A[i] has aligned access [ auto_vec.c(4,5) ]

remark #15388: vectorization support: reference A[i] has aligned access [ auto_vec.c(4,5) ]

remark #15389: vectorization support: reference B[i] has unaligned access [ auto_vec.c(4,11) ]

remark #15381: vectorization support: unaligned access used inside loop body

remark #15305: vectorization support: vector length 16

remark #15399: vectorization support: unroll factor set to 2

remark #15309: vectorization support: normalized vectorization overhead 0.591

remark #15300: LOOP WAS VECTORIZED

remark #15442: entire loop may be executed in remainder

remark #15448: unmasked aligned unit stride loads: 1

remark #15449: unmasked aligned unit stride stores: 1

remark #15450: unmasked unaligned unit stride loads: 1

remark #15475: --- begin vector cost summary ---

remark #15476: scalar cost: 8

remark #15477: vector cost: 0.680

remark #15478: estimated potential speedup: 11.550

remark #15488: --- end vector cost summary ---

remark #25015: Estimate of max trip count of loop=312

LOOP END

LOOP BEGIN at auto_vec.c(3,3)

remark #15389: vectorization support: reference A[i] has unaligned access [ auto_vec.c(4,5) ]

remark #15389: vectorization support: reference A[i] has unaligned access [ auto_vec.c(4,5) ]

remark #15389: vectorization support: reference B[i] has unaligned access [ auto_vec.c(4,11) ]

remark #15381: vectorization support: unaligned access used inside loop body

remark #15305: vectorization support: vector length 8

remark #15309: vectorization support: normalized vectorization overhead 1.167

remark #15301: REMAINDER LOOP WAS VECTORIZED

LOOP END

...Listing 4: Optimization report produced by the Intel C compiler.

In this particular example we see that the compiler produced three parts of the loop:

- A peel loop designed to process a few elements at the start of the loop to reach an aligned boundary in memory. This loop is taken only if the beginning of A is not on a 64-byte aligned boundary (i.e., memory address of A[0] is not a multiple of 64 bytes).

- The main loop that processes the bulk of the iterations, starting with the aligned address to which the peel loop has progressed.

- The remainder loop that processes a few elements at the end of the loop in case the number of loop iterations minus the peel size is not a multiple of the vector length (or the vector length multiplied by the unroll factor).

In codes that have long loops or short loops with aligned data, the peel loop and the remainder loop do not take a significant fraction of the processing time. Therefore, for our discussions in this paper, we will be only interested in the main loop.

When we produce the assembly with -S, we find it useful to use an additional compiler argument, -qopt-report-embed, which inserts remarks about vectorization into the assembly. In particular, it helps us to distinguish between the code for the peel loop, the main loop, and the remainder loop. You can see how they look in the listing below. We do not show these remarks in assembly listings in the rest of this paper, but we used them in the writing process to identify the main loop body.

user@node% icc -xCORE-AVX512 -S auto_vec.c -qopt-report-embed

user@node% cat auto_vec.s

..L3:

# optimization report

# LOOP WAS VECTORIZED

# PEELED LOOP FOR VECTORIZATION

# MASKED VECTORIZATION

# VECTORIZATION HAS UNALIGNED MEMORY REFERENCES

# VECTORIZATION SPEEDUP COEFFECIENT 1.641602

# VECTOR TRIP COUNT IS ESTIMATED CONSTANT

# VECTOR LENGTH 8

# NORMALIZED VECTORIZATION OVERHEAD 1.125000

# MAIN VECTOR TYPE: 64-bits floating point

vpcmpud $1, %ymm0, %ymm1, %k1 #3.3

addq $8, %rcx #3.3

vpaddd %ymm2, %ymm1, %ymm1 #3.3

vmovupd (%rdx,%rdi), %zmm3{%k1}{z} #4.5

...

..L4:

# optimization report

# LOOP WAS UNROLLED BY 2

# LOOP WAS VECTORIZED

# VECTORIZATION HAS UNALIGNED MEMORY REFERENCES

# VECTORIZATION SPEEDUP COEFFECIENT 11.562500

# VECTOR TRIP COUNT IS KNOWN CONSTANT

# VECTOR LENGTH 16

# NORMALIZED VECTORIZATION OVERHEAD 0.562500

# MAIN VECTOR TYPE: 64-bits floating point

vmovups (%rdi,%r8,8), %zmm0 #4.5

vmovups 64(%rdi,%r8,8), %zmm1 #4.5

vmovups 128(%rdi,%r8,8), %zmm4 #4.5

...

..L5:

# optimization report

# LOOP WAS VECTORIZED

# REMAINDER LOOP FOR VECTORIZATION

# MASKED VECTORIZATION

# VECTORIZATION HAS UNALIGNED MEMORY REFERENCES

# VECTORIZATION SPEEDUP COEFFECIENT 3.550781

# VECTOR TRIP COUNT IS ESTIMATED CONSTANT

# VECTOR LENGTH 8

# NORMALIZED VECTORIZATION OVERHEAD 1.125000

# MAIN VECTOR TYPE: 64-bits floating point

vpcmpud $1, %ymm0, %ymm1, %k1 #3.3

lea (%rax,%rcx), %r8d #4.5

vpaddd %ymm2, %ymm1, %ymm1 #3.3

movslq %r8d, %r8 #4.5

...Listing 5: Remarks in the assembly listing produced by the Intel compiler with -qopt-report-embed.

With the GNU C compiler, the equivalent compilation commands are shown in the listing below.

gcc -O3 -march=skylake-avx512 -c auto_vec.c

gcc -O3 -march=skylake-avx512 -S auto_vec.c

gcc -O3 -march=skylake-avx5122 -c auto_vec.c -fopt-info-vecListing 6: Compilation commands for GCC to produce executable code and assembly listing.

The assembly produced by GCC 6.3.0 (see the listing below) also contains vectorized addition. However, you can see that GCC has decided not to unroll the loop, possibly leading to a less efficient implementation.

.L4:

vmovupd (%r8,%rax), %zmm0

vaddpd (%rcx,%rax), %zmm0, %zmm0

addl $1, %edx

vmovapd %zmm0, (%rcx,%rax)

addq $64, %rax

cmpl %r9d, %edx

jb .L4Listing 7: Assembly of vector addition produced by GCC.

The optimization report produced by GCC with -fopt-info-vec is much less informative than Intel compiler’s report, as shown in the listing below.

user@node% gcc -O3 -march=skylake-avx512 -S auto_vec.c -fopt-info-vec

auto_vec.c:3:3: note: loop vectorized

auto_vec.c:3:3: note: loop peeled for vectorization to enhance alignmentListing 8: Optimization report of GCC.

It is possible to obtain more information with -fopt-info-vec-all, or restrict the output to failed vectorization with -fopt-info-vec-missed. However, the output of GCC with these arguments in our simple case is not as informative and as easy to read as the Intel compiler’s report. Note that the previously used argument for vectorization diagnostics, -ftree-vectorizer-verbose=n, is deprecated in version 6.3.0 of GCC that we used for the materials of this paper.

Using the correct compiler arguments for automatic vectorization is only one of the instruments in the programmer’s toolbox. In some cases, the programmer must expose vectorization opportunities, adjust data containers, guide the compiler with directives, rebalance parallelism in the application, or optimize the data traffic to get vectorization working efficiently. These techniques go beyond the scope of this paper, and we can refer you to our free training programs for more details (e.g., HOW Series “Deep Dive” or HOW Series “KNL”).

2. Skylake and Broadwell

As we mentioned above, Intel Xeon processor Scalable family based on the Skylake (SKL) microarchitecture is the first Intel Xeon product line to support AVX-512. These processors succeed the Intel Xeon processor v4 family based on the Broadwell (BDW) microarchitecture, which supports instructions from MMX and SSE to AVX and AVX2.

Section 2 explains and illustrates with examples the differences in vectorization capabilities of the Skylake and Broadwell architectures.

2.1. AVX-512: ZMM Registers

Skylake cores support 512-bit registers while Broadwell cores use 256-bit vectors. Some instructions have the same throughput (sustained number of pipelined instructions processed per cycle) on SKL and BDW. For example, both BDW and SKL have a throughput for FMA of 2 instructions per cycle. These considerations may lead to the erroneous conclusion that by just changing the width of vector iterations in the code (or the names of vector registers in the assembly), we can port a code from BDW to SKL and observe a speedup by a factor of 2 (relative to clock frequency). In reality, the porting process has several complications:

- SKL cores want to consume 2x as much data per cycle as BDW cores. However, the bandwidth of SKL caches and memory is only slightly greater than on BDW. The difference between the amount of improvement in throughput and in bandwidth means that data reuse optimizations (such as loop tiling) must be adjusted before peak performance on SKL may be observed.

- The latency of fast instructions, such as FMA, is different. On BDW, FMA from AVX2 has a latency of 5 cycles, while on SKL the latency of FMA from AVX-512 is 4 cycles (on KNL, it is 6 cycles). Therefore, more FMA instructions must be pipelined on BDW than on SKL to observe the effective throughput of 2 instructions per cycle.

- The number of available registers is different. Each SKL core supports 32 vector registers, while BDW cores have 16 registers. Additional registers expand the opportunities for pipelining and data reuse in registers on SKL.

The example in the listing below shows a benchmark of the fused multiply-add (FMA) operation.

#include <stdio.h>

#include <omp.h>

const int n_trials = 1000000000; // Enough to keep cores busy for a while and observe a steady state

const int flops_per_calc = 2; // Multiply + add = 2 instructions

const int n_chained_fmas = 10; // Must be tuned for architectures here and in blocks (R) and in (E)

int main() {

#pragma omp parallel

{ } // Warm up the threads

const double t0 = omp_get_wtime(); // start timer

#pragma omp parallel

{ // Benchmark in all threads

double fa[VECTOR_WIDTH*n_chained_fmas], fb[VECTOR_WIDTH], fc[VECTOR_WIDTH];

fa[0:VECTOR_WIDTH*n_chained_fmas] = 0.0; // prototype of a memory-based array

fb[0:VECTOR_WIDTH] = 0.5; // fixed

fc[0:VECTOR_WIDTH] = 1.0; // fixed

register double *fa01 = fa + 0*VECTOR_WIDTH; // This is block (R)

register double *fa02 = fa + 1*VECTOR_WIDTH; // To tune for a specific architecture,

register double *fa03 = fa + 2*VECTOR_WIDTH; // more or fewer fa* variables

register double *fa04 = fa + 3*VECTOR_WIDTH; // must be used

register double *fa05 = fa + 4*VECTOR_WIDTH;

register double *fa06 = fa + 5*VECTOR_WIDTH;

register double *fa07 = fa + 6*VECTOR_WIDTH;

register double *fa08 = fa + 7*VECTOR_WIDTH;

register double *fa09 = fa + 8*VECTOR_WIDTH;

register double *fa10 = fa + 9*VECTOR_WIDTH;

int i, j;

#pragma nounroll // Prevents automatic unrolling by compiler to avoid skewed benchmarks

for(i = 0; i < n_trials; i++)

#pragma omp simd // Ensures that vectorization does occur

for (j = 0; j < VECTOR_WIDTH; j++) { // VECTOR_WIDTH=4 for AVX2, =8 for AVX-512

fa01[j] = fa01[j]*fb[j] + fc[j]; // This is block (E)

fa02[j] = fa02[j]*fb[j] + fc[j]; // To tune for a specific architecture,

fa03[j] = fa03[j]*fb[j] + fc[j]; // more or fewer such FMA constructs

fa04[j] = fa04[j]*fb[j] + fc[j]; // must be used

fa05[j] = fa05[j]*fb[j] + fc[j];

fa06[j] = fa06[j]*fb[j] + fc[j];

fa07[j] = fa07[j]*fb[j] + fc[j];

fa08[j] = fa08[j]*fb[j] + fc[j];

fa09[j] = fa09[j]*fb[j] + fc[j];

fa10[j] = fa10[j]*fb[j] + fc[j];

}

fa[0:VECTOR_WIDTH*n_chained_fmas] *= 2.0; // Prevent dead code elimination

}

const double t1 = omp_get_wtime();

const double gflops = 1.0e-9*(double)VECTOR_WIDTH*(double)n_trials*(double)flops_per_calc*

(double)omp_get_max_threads()*(double)n_chained_fmas;

printf("Chained FMAs=%d, vector width=%d, GFLOPs=%.1f, time=%.6f s, performance=%.1f GFLOP/s\n",

n_chained_fmas, VECTOR_WIDTH, gflops, t1 - t0, gflops/(t1 - t0));

}Listing 9: Fused multiply-add (FMA) benchmark with 10 chained FMAs.

This code is written in a way that the dataset can be contained completely in the registers. Therefore, it is a pure test of performance. The code issues several FMA instructions in a row, which are independent (do not have to wait for each other’s results). Multiple instructions are needed to populate the pipeline with sufficient amount of work, which will help to achieve the performance limited by the FMA instruction throughput, rather than latency. Therefore, n_chained_fmas must be chosen to be no less than the ratio of the FMA latency (in cycles) to its reciprocal throughput (cycles per instruction). At the same time, this value must be small enough that all of the fa* variables can be contained within the registers of one core. Correspondingly, lines must be added or removed in blocks (R) and (E).

The dependence of the optimal number of chained FMAs on the architecture means that to tune the code for Broadwell or Skylake, slightly different codes must be used for the two architectures. That is, of course, in addition to using different compilation arguments for automatic vectorization.

We can estimate the optimal range for n_chained_fmas for both architectures.

- For Broadwell, FMA has a latency of 5 cycles and reciprocal throughput of 0.5 cycle (i.e., 2 instructions per cycle), so the number of chained FMAs must be no less than 5/0.5=10. At the same time, with 16 registers per core, and two of them occupied by fb and fc, we can only use up to 16-2=14 chained FMAs. So n_chained_fmas must be between 10 and 14, inclusive.

- For Skylake, FMA has a latency of 4 cycles and reciprocal throughput of 0.5 cycle (i.e., 2 instructions per cycle), so the number of chained FMAs must be no less than 4/0.5=8. At the same time, with 32 registers per core, and two of them occupied by fb and fc, we can only use up to 32-2=30 chained FMAs. So n_chained_fmas must be between 8 and 30, inclusive, which is a much wider range than for Broadwell.

To compile the code into assembly for the respective architectures, we used the commands shown in the listing below. As you can see, we are compiling different source files: for Broadwell, fma_10.c has n_chained_fmas=10, and for Skylake, fma_08.c has n_chained_fmas=8.

icpc -qopenmp -DVECTOR_WIDTH=4 -xCORE-AVX2 -S -o fma_avx2_10.s fma_10.c

icpc -qopenmp -DVECTOR_WIDTH=8 -xCORE-AVX512 -S -o fma_avx512_08.s fma_08.cListing 10: Compilation of the fused multiply-add benchmark code for Broadwell and Skylake.

The generated assembly for Broadwell looks like in the listing below. You can see the usage of vfmadd213pd with two constant registers YMM0 and YMM7, and a set of registers YMM1 … YMM6 and YMM8 … YMM11 that serve as input as well as output. YMM are 256-bit wide AVX2 registers, each containing four double precision numbers.

..B1.24: # Preds ..B1.24 ..B1.23

# Execution count [1.00e+06]

incl %eax #35.5

vfmadd213pd %ymm0, %ymm7, %ymm11 #38.35

vfmadd213pd %ymm0, %ymm7, %ymm10 #39.35

vfmadd213pd %ymm0, %ymm7, %ymm9 #40.35

vfmadd213pd %ymm0, %ymm7, %ymm8 #41.35

vfmadd213pd %ymm0, %ymm7, %ymm2 #42.35

vfmadd213pd %ymm0, %ymm7, %ymm3 #43.35

vfmadd213pd %ymm0, %ymm7, %ymm4 #44.35

vfmadd213pd %ymm0, %ymm7, %ymm5 #45.35

vfmadd213pd %ymm0, %ymm7, %ymm6 #46.35

vfmadd213pd %ymm0, %ymm7, %ymm1 #47.35

cmpl $1000000000, %eax #35.5

jb ..B1.24 # Prob 99% #35.5Listing 11: Lines from Fused Multiply Add example assembly code on Broadwell.

The generated assembly for Skylake looks like in the listing below. It also uses the vfmadd213pd instruction, but ZMM are the 512-bit AVX-512 registers. Therefore, every instruction processes eight double precision numbers.

..B1.24: # Preds ..B1.24 ..B1.23

# Execution count [1.00e+06]

incl %eax #33.5

vfmadd213pd %zmm0, %zmm1, %zmm9 #36.35

vfmadd213pd %zmm0, %zmm1, %zmm8 #37.35

vfmadd213pd %zmm0, %zmm1, %zmm7 #38.35

vfmadd213pd %zmm0, %zmm1, %zmm6 #39.35

vfmadd213pd %zmm0, %zmm1, %zmm5 #40.35

vfmadd213pd %zmm0, %zmm1, %zmm4 #41.35

vfmadd213pd %zmm0, %zmm1, %zmm3 #42.35

vfmadd213pd %zmm0, %zmm1, %zmm2 #43.35

cmpl $1000000000, %eax #33.5

jb ..B1.24 # Prob 99% #33.5Listing 12: Lines from Fused Multiply Add example assembly code on Skylake.

We measured the performance of these codes on two systems:

- Intel Xeon processor 2699 v4 (Broadwell) with 2 sockets, each socket with 22 cores at 2.2 GHz and

- Intel Xeon Platinum 8160 processor (Skylake) with 2 sockets, each socket with 24 cores at 2.1 GHz.

On both systems we turned off the Intel® Turbo Boost technology by disabling the intel_pstate driver via a kernel argument (to fall back to the acpi-cpufreq driver) and setting the CPUfreq governor “userspace” combined with a fixed clock frequency of 2.2~GHz on Broadwell and 2.1~GHz on Skylake.

Our measurements were taken for a set of codes with the number of chained FMAs ranging from 1 (no chaining) to 35 (too many to fit even in the Skylake register file). We set the environment variable KMP_HW_SUBSET=1t, which results in 44 OpenMP threads on Broadwell and 48 threads on Skylake, and KMP_AFFINITY=compact,1, which places one thread on each physical core.

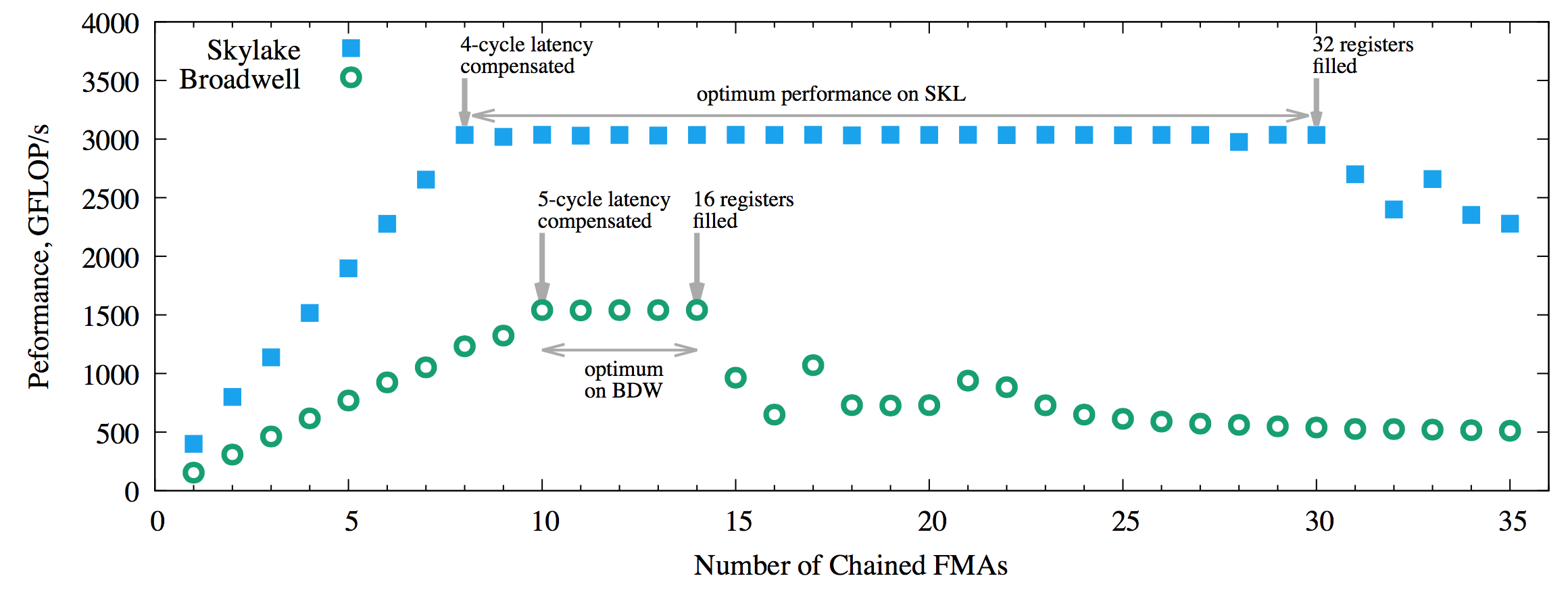

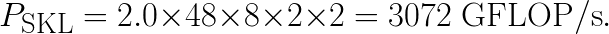

The results are shown in the figure below.

Figure 2: Performance of the FMA benchmark on Broadwell and Skylake architecture processors with 1 thread/core.

We observe the optimum performance on Broadwell for the number of chained FMAs between 10 and 14. This range is consistent with our theoretical predictions. The best performance that we measured is 1540 GFLOP/s.

Skylake achieves the optimum performance for the number of chained FMAs between 8 and 30. This range is also in agreement with our theoretical expectations. The best performance on Skylake is 3030 GFLOP/s.

The range of well-performing values for n_chained_fmas is much wider on Skylake than on Broadwell due to a lower latency of FMA and a larger register file on SKL. This fact is important for performance tuning strategy: instead of trying to contain the traffic of reusable data in caches, you can have better results by reusing data in the register file.

In cases when you do not have enough independent instructions to populate the pipeline, you can use the Intel® Hyper-Threading technology. This technology allows each core in the CPU to present itself as two (for Intel Xeon processors) or four (for Intel Xeon Phi processors) logical processors, each of which is designed to execute a single thread. The instruction decode unit in the core can pipeline instructions from all logical processors in a core. Therefore, with two threads per core on Intel Xeon Processors or two to four on Intel Xeon Phi processors, you can achieve the plateau of peak performance with fewer chained instructions. The same approach can be used to mask the latency of memory accesses when fully containing memory traffic to caches or registers is not possible or practical.

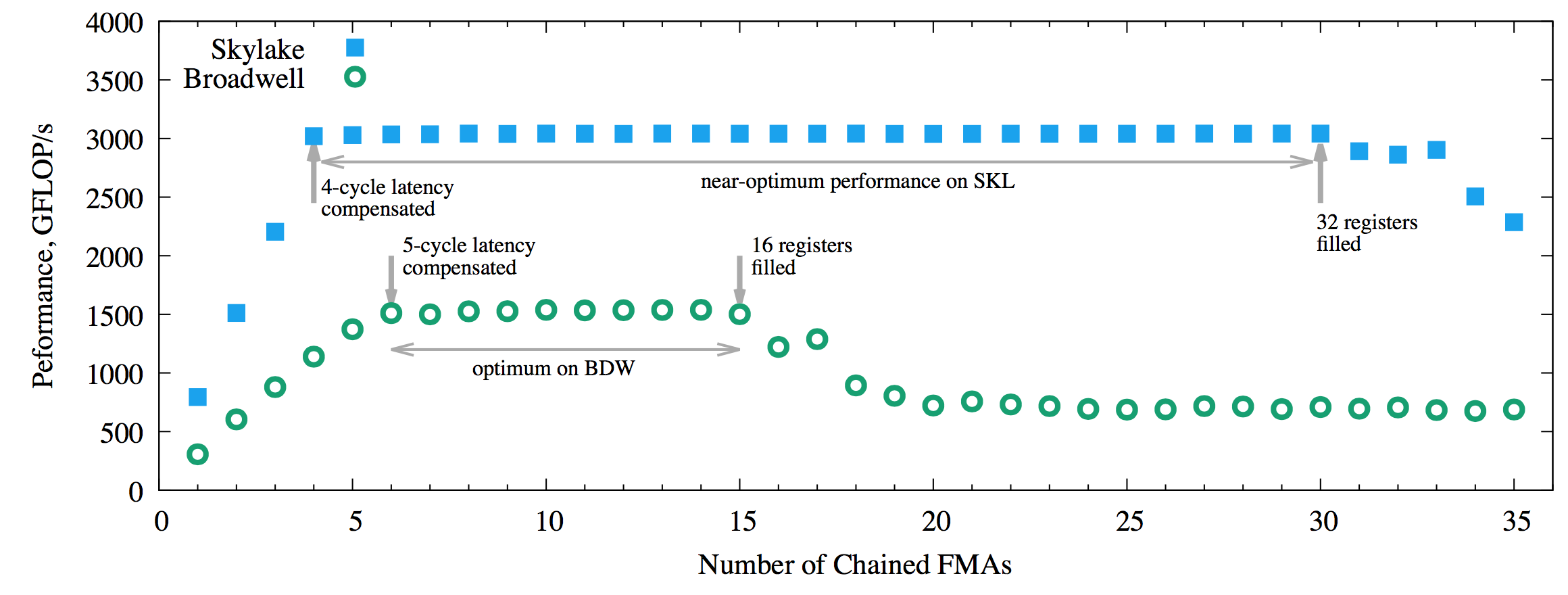

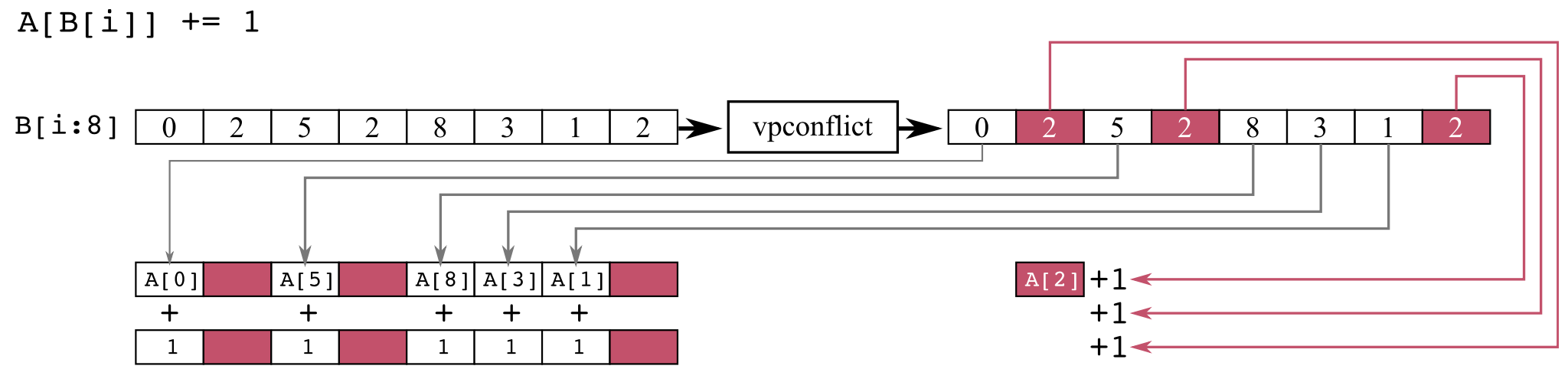

The figure below shows the performance benchmark of FMA, this time measured with two threads per core by setting KMP_HW_SUBSET=2t, which results in 88 threads on Skylake and 96 on Broadwell.

Figure 3: Performance of the FMA benchmark on Broadwell and Skylake architecture processors with 2 threads/core.

In this case, we can see the plateau achieved with just 4 and 6 chained FMAs on Skylake and Broadwell, respectively. However, the level of the plateau on Skylake stays around 10% lower than the optimum until the number of chained FMAs reaches 16. We attribute this effect to the overhead of the hyper-threading technology.

2.2. Clock Frequency and Peak Performance

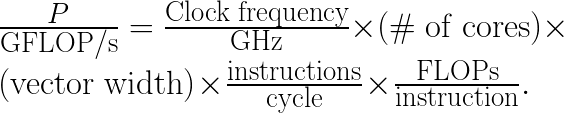

Now we should estimate the theoretical peak performance to make sure that the measured numbers make sense. The peak performance for a single instruction (we will denote it as P) can be estimated as

In the case of FMA, FLOPs/instruction=2 by definition, and the throughput instructions/cycle=2 in both our systems. So for our Broadwell CPU (Intel Xeon processor E5-2699 v4),

For the Skylake CPU (Intel Xeon Platinum processor 8160), the base frequency listed in the CPU specifications is 2.1 GHz. However, this frequency is applicable only to non-AVX workloads. For workloads heavy in AVX-512, like our benchmark, the CPU reduces the clock frequency. According to Intel Xeon processor Scalable family specification, page 15, when all cores are heavily utilized with AVX-512, the processor clocks down to 2.0 GHz, making

These peak performance estimates are only marginally higher than our measurements for Broadwell and Skylake, 1540 and 3030 GFLOP/s, respectively. Quantitatively, our efficiency in both cases is 99%.

At the same time, both processors are capable of frequency scaling thanks to the Intel Turbo Boost technology. The maximum turbo frequency for Intel Xeon processor E5-2699 v4 is 3.6 GHz and for Intel Xeon Platinum processor 8160 it is equal to 3.7 GHz. If the Intel Turbo Boost technology is enabled in the BIOS, and the CPUfreq governor in the Linux kernel allows frequency scaling, the runtime frequency may be greater than the base clock frequency, up to the maximum Turbo frequency. We ran the benchmark without modification on the Broadwell processor with enabled frequency scaling, and in the optimum case, the cores settled into a clock frequency of 2.6 GHz and delivered a maximum performance of 1940 GFLOP/s, which is 26% greater than the measurement without frequency scaling. On Skylake, cores settled into a clock frequency of 2.0 GHz, producing the nearly same performance as without frequency scaling, 3040~GFLOP/s.

We will not discuss clock frequencies further in this publication, as this subject is only indirectly related to the changes in the instruction set. We may cover this topic in a different paper.

Finally, regarding the throughput of FMA, it is important to mention that our Skylake processor delivered a throughput of two FMA instructions per cycle because it had two FMA units ported to port 0 and 5. Some other processors based on the Skylake architecture may have a single FMA unit located on port 0. The lack of a second FMA unit reduces the throughput expectation to one instruction per cycle. More details can be found in Section 13.20 of the Intel® 64 and IA-32 Architectures Optimization Reference Manual.

2.3. AVX-512CD: Conflict Detection

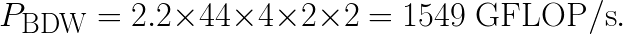

The conflict detection instruction introduced in AVX-512CD operates on a vector of memory addresses or integers. It returns the set of vector lanes that contain elements equal to any other elements in this vector. This instruction, along with a few other support instructions of AVX-512CD, allows the vectorization of codes with indirect memory accesses leading to write conflicts. Binning (histogram data computation) is an example of such workloads. Binning was not fully vectorizable in earlier instruction sets (see, e.g., this paper).

The figure below illustrates the workflow of the microkernel A[B[i]]+=1, where i ranges from 0 to n-1.

Figure 4: Histogram data computation with conflict detection

For older instruction sets (e.g., AVX/AVX2), this kernel must be executed sequentially, i.e., one value of i per instruction. Indeed, if the offset B[i] has the same value for different values of i, then the corresponding values of A must be incremented several times. In contrast, for AVX-512CD, Intel compilers implement a procedure that consists of three steps. First, the code loads a block of values from B in a vector register, and a conflict detection instruction identifies vector lanes in which the values (offsets) are identical, i.e., in conflict. Second, the code increments the values of A corresponding to non-conflicting values of i in parallel with a vector addition instruction. Third, the code sequentially increments the values of A corresponding to conflicting values of i. In the last step, sequential execution is necessary to produce correct results. However, in the second step, parallelism is acceptable, and vectorization of this step may yield performance gains compared to sequential execution, especially if the frequency of conflicts is low.

Let’s study this new functionality with an example. The for-loop in the listing below is an example of a vector dependence due to the possible writing conflict in adjacent iterations, which makes it impossible to vectorize on the Broadwell microarchitecture.

const int ARR_SIZE=8000;

int main() {

float A[ARR_SIZE],C[ARR_SIZE];

int B[ARR_SIZE];

int i;

for(i = 0; i < ARR_SIZE; i++)

A[B[i]] += 1.0f/C[i];

}Listing 13: Loop with conflicts and vector dependencies.

To compile the example for Broadwell, use command lines in the listing below with -xCORE-AVX2.

icc CD.c -xCORE-AVX2 -O3 -qopt-report=5 -fp-model fast=2 -SListing 14: Compilation lines for conflict detection example (Broadwell).

This command produces an optimization report and assembly file. In the case of Broadwell, according to the optimization report in the listing below, the loop was not vectorized due to a vector dependence (i.e., operations a block of values of i cannot be performed in parallel using vector instructions).

LOOP BEGIN at CD.c(9,3)

remark #15344: loop was not vectorized: vector dependence prevents vectorization

remark #15346: vector dependence: assumed FLOW dependence between A[B[i]] (10:5) and A[B[i]] (10:5)

remark #15346: vector dependence: assumed ANTI dependence between A[B[i]] (10:5) and A[B[i]] (10:5)

remark #25438: unrolled without remainder by 4

remark #25456: Number of Array Refs Scalar Replaced In Loop: 4

LOOP ENDListing 15: Optimization report for conflict detection example on Broadwell.

The associated assembly code in the listing below shows that the loop was not vectorized, and it was implemented on the lower 128 bits (XMM) of the vector registers.

..B1.2: # Preds ..B1.2 ..B1.6

# Execution count [2.00e+03]

vdivss 64000(%rsp,%rax), %xmm0, %xmm1 #10.21

vdivss 64004(%rsp,%rax), %xmm0, %xmm3 #10.21

vdivss 64008(%rsp,%rax), %xmm0, %xmm5 #10.21

vdivss 64012(%rsp,%rax), %xmm0, %xmm7 #10.21

movslq 32000(%rsp,%rax), %rcx #10.7

incl %edx #9.3

movslq 32004(%rsp,%rax), %rdi #10.7

movslq 32008(%rsp,%rax), %r9 #10.7

movslq 32012(%rsp,%rax), %r10 #10.7

lea (,%rcx,4), %rsi #10.5

addq $16, %rax #9.3

lea (,%rdi,4), %r8 #10.5

vaddss (%rsp,%rcx,4), %xmm1, %xmm2 #10.5

vmovss %xmm2, (%rsp,%rsi) #10.5

vaddss (%rsp,%rdi,4), %xmm3, %xmm4 #10.5

vmovss %xmm4, (%rsp,%r8) #10.5

vaddss (%rsp,%r9,4), %xmm5, %xmm6 #10.5

vmovss %xmm6, (%rsp,%r9,4) #10.5

vaddss (%rsp,%r10,4), %xmm7, %xmm8 #10.5

vmovss %xmm8, (%rsp,%r10,4) #10.5

cmpl $2000, %edx #9.3

jb ..B1.2 # Prob 99% #9.3

# LOE rax rbx r12 r13 r14 r15 edx xmm0Listing 16: Lines from assembly code for compiling conflict detection example on Broadwell.

To compile the code for Skylake, use the command in the listing below with -xCORE-AVX512.

icc CD.c -xCORE-AVX512 -O3 -qopt-report=5 -fp-model fast=2 -SListing 17: Compilation lines for conflict detection example (Skylake).

In the case of Skylake, the optimization report in the listing below shows that the loop was vectorized and that the vector dependencies were resolved by recognizing a histogram calculation pattern.

LOOP BEGIN at CD.c(9,3)

remark #15388: vectorization support: reference B[i] has aligned access [ CD.c(10,7) ]

remark #15388: vectorization support: reference B[i] has aligned access [ CD.c(10,7) ]

remark #15388: vectorization support: reference C[i] has aligned access [ CD.c(10,21) ]

remark #15416: vectorization support: irregularly indexed store was generated for the variable <A[B[i]]>, part of index is read from memory [ CD.c(10,5) ]

remark #15415: vectorization support: irregularly indexed load was generated for the variable <A[B[i]]>, part of index is read from memory [ CD.c(10,5) ]

remark #15305: vectorization support: vector length 16

remark #15300: LOOP WAS VECTORIZED

remark #15448: unmasked aligned unit stride loads: 2

remark #15462: unmasked indexed (or gather) loads: 1

remark #15463: unmasked indexed (or scatter) stores: 1

remark #15475: --- begin vector cost summary ---

remark #15476: scalar cost: 36

remark #15477: vector cost: 16.810

remark #15478: estimated potential speedup: 2.140

remark #15486: divides: 1

remark #15488: --- end vector cost summary ---

remark #15499: histogram: 2

remark #25015: Estimate of max trip count of loop=500

LOOP BEGIN at

remark #25460: No loop optimizations reported

LOOP END

LOOP ENDListing 18: Optimization report for conflict detection example on Skylake.

According to the optimization report, the potential speedup of the loop vectorization on Skylake is 2.14 compared to scalar code. Note that speedups in the optimization report are estimated relative to scalar code on the same architecture and cannot be used to compare the estimated performance between architectures.

In the listing below we show the assembly for the Skylake code.

..B1.2: # Preds ..B1.6 ..B1.10

# Execution count [0.00e+00]

vmovups 32064(%rsp,%rcx,4), %zmm2 #10.21

vrcp14ps %zmm2, %zmm1 #10.21

vmulps %zmm2, %zmm1, %zmm2 #10.21

vaddps %zmm1, %zmm1, %zmm4 #10.21

vfnmadd213ps %zmm4, %zmm1, %zmm2 #10.21

vmovups 64064(%rsp,%rcx,4), %zmm4 #10.7

kmovw %k1, %k2 #10.5

vpconflictd %zmm4, %zmm1 #10.5

vptestmd .L_2il0floatpacket.1(%rip), %zmm1, %k0 #10.5

kmovw %k0, %eax #10.5

vpxord %zmm0, %zmm0, %zmm0 #10.5

vgatherdps (%rdx,%zmm4,4), %zmm0{%k2} #10.5

vaddps %zmm2, %zmm0, %zmm3 #10.5

testl %eax, %eax #10.5

je ..B1.6 # Prob 30% #10.5

# LOE rdx rcx rbx r12 r13 r14 r15 zmm1 zmm2 zmm3 zmm4 k1

..B1.3: # Preds ..B1.2

# Execution count [0.00e+00]

vpbroadcastmw2d %k1, %zmm6 #10.5

vplzcntd %zmm1, %zmm5 #10.5

vmovups .L_2il0floatpacket.2(%rip), %zmm0 #10.5

vptestmd %zmm1, %zmm6, %k0 #10.5

vpsubd %zmm5, %zmm0, %zmm0 #10.5

kmovw %k0, %eax #10.5

# LOE rdx rcx rbx r12 r13 r14 r15 eax zmm0 zmm1 zmm2 zmm3 zmm4 k1

..B1.4: # Preds ..B1.4 ..B1.3

# Execution count [0.00e+00]

kmovw %eax, %k2 #10.5

vpbroadcastmw2d %k2, %zmm5 #10.5

vpermps %zmm3, %zmm0, %zmm3{%k2} #10.5

vptestmd %zmm1, %zmm5, %k0{%k2} #10.5

vaddps %zmm2, %zmm3, %zmm3{%k2} #10.5

kmovw %k0, %eax #10.5

testl %eax, %eax #10.5

jne ..B1.4 # Prob 70% #10.5

# LOE rdx rcx rbx r12 r13 r14 r15 eax zmm0 zmm1 zmm2 zmm3 zmm4 k1

..B1.6: # Preds ..B1.4 ..B1.2

# Execution count [8.00e+03]

addq $16, %rcx #9.3

kmovw %k1, %k2 #10.5

vscatterdps %zmm3, (%rdx,%zmm4,4){%k2} #10.5

cmpq $8000, %rcx #9.3

jb ..B1.2 # Prob 99% #9.3

# LOE rdx rcx rbx r12 r13 r14 r15 k1Listing 19: Lines from assembly code for compiling conflict detection example on Skylake.

In block B1.2, the vpconflictd instruction compares each element of the vector register with all previous elements in that register and outputs the results of all comparisons. If there are no conflicts, the code jumps to block B1.6, where the resulting vector is scattered into array A. Otherwise (i.e., in case of conflicts), the code makes preparation work in B1.3 and sets up a loop in B1.4 over the conflicted vector lanes. After that, in B1.6, the resulting vector with the correctly incremented vector lanes is written back to memory.

2.4. AVX-512F: Masking

Masking in AVX-512 is supported by a set of eight 64-bit opmask registers. Masking registers conditionally control computational operations and updates of the destination vector with per-element granularity. The purpose of masked operations is vectorization of loops with if-statements.

The AVX-512 masking support is an improvement over AVX2. Masking in AVX2 uses one of the 256-bit YMM vector registers to hold the mask. AVX2 load, store, gather and blend operations accept the mask register explicitly, and masked memory access may be sufficient for simple cases. Other AVX2 instructions do not explicitly support masking, but in more complex cases, the compiler can emulate masking in other instructions by performing unmasked arithmetic followed by bitwise “and” with the mask register. These operations must be followed by a blend instruction. In contrast, AVX-512 masking uses dedicated bitmask registers, which do not occupy ZMM space. Most AVX-512 instructions accept a mask explicitly, so following up with bitwise “and” is not neccessary. Additionally, AVX-512 masking is enabled for 128, 256 and 512-bit vector length, and supports all data types: byte, word, double and quad-word.

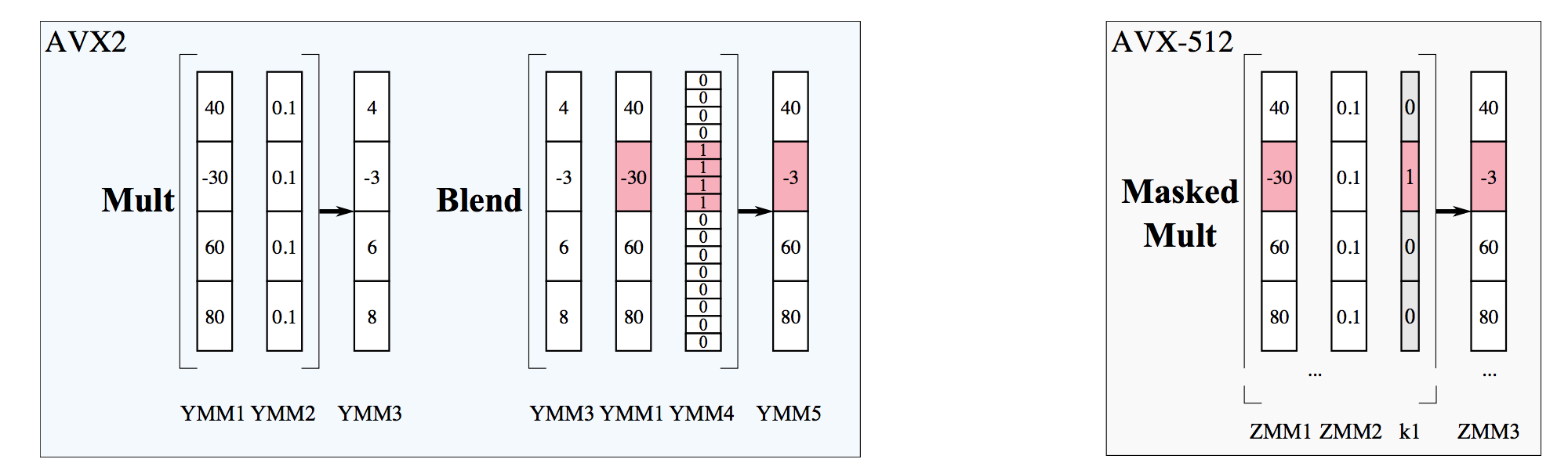

The figure below demonstrates the difference between the implementation of masking in AVX2 and AVX-512 for the kernel “if (B[i] < 0.0) B[i]*=0.1“.

Figure 5: Illustration of the difference between masking functionality in AVX2 and AVX-512.

In AVX2, the mask is stored in a YMM data register, while in AVX-512 it is stored in a bitmask register k1. AVX2 requires two operations: unmasked multiplication and blending, while AVX-512 uses only one masked multiplication. AVX2 uses five data registers, while AVX-512 uses three data registers and one bitmask register.

The listing below contains an example of a loop that the Intel compiler can vectorize with the help of masking.

void ConditionalLoop(double * restrict A, double * restrict B, double * restrict C) {

int i;

for( i = 0; i < 10000; i++ ) {

if(B[i] > 0.0)

A[i] *= B[i];

else

A[i] += B[i];

}

}Listing 20: Masking example.

The listing below shows the assembly for this code compiled for the Broadwell target. The compiler unrolls the loop and for each vector iteration implements arithmetics (vmulpd, vaddpd), comparison (vcmpgtpd), and masking (vxorpd, vandpd and vblendvpd).

..B1.11: # Preds ..B1.11 ..B1.10

vmovupd (%rsi,%rdx,8), %ymm5 #4.8

vmovupd 32(%rsi,%rdx,8), %ymm13 #4.8

vmovupd 32(%rdi,%rdx,8), %ymm12 #7.7

vmovupd (%rdi,%rdx,8), %ymm4 #7.7

vcmpgtpd %ymm1, %ymm5, %ymm8 #4.15

vmulpd %ymm13, %ymm12, %ymm15 #5.7

vmulpd %ymm5, %ymm4, %ymm7 #5.7

vxorpd %ymm8, %ymm0, %ymm2 #4.15

vandpd %ymm2, %ymm5, %ymm3 #7.15

vcmpgtpd %ymm1, %ymm13, %ymm2 #4.15

vaddpd %ymm3, %ymm4, %ymm6 #7.7

vxorpd %ymm2, %ymm0, %ymm10 #4.15

vandpd %ymm10, %ymm13, %ymm11 #7.15

vaddpd %ymm11, %ymm12, %ymm14 #7.7

vblendvpd %ymm8, %ymm7, %ymm6, %ymm9 #7.7

vblendvpd %ymm2, %ymm15, %ymm14, %ymm3 #7.7

vmovupd 96(%rsi,%rdx,8), %ymm15 #4.8

vmovupd 64(%rsi,%rdx,8), %ymm7 #4.8

vmovupd 64(%rdi,%rdx,8), %ymm6 #7.7

vmovupd 96(%rdi,%rdx,8), %ymm14 #7.7

vmovupd %ymm9, (%rdi,%rdx,8) #5.7

vmovupd %ymm3, 32(%rdi,%rdx,8) #5.7

vmulpd %ymm7, %ymm6, %ymm9 #5.7

vcmpgtpd %ymm1, %ymm15, %ymm2 #4.15

vcmpgtpd %ymm1, %ymm7, %ymm10 #4.15

vxorpd %ymm2, %ymm0, %ymm12 #4.15

vandpd %ymm12, %ymm15, %ymm13 #7.15

vxorpd %ymm10, %ymm0, %ymm4 #4.15

vaddpd %ymm13, %ymm14, %ymm12 #7.7

vmulpd %ymm15, %ymm14, %ymm13 #5.7

vandpd %ymm4, %ymm7, %ymm5 #7.15

vaddpd %ymm5, %ymm6, %ymm8 #7.7

vblendvpd %ymm2, %ymm13, %ymm12, %ymm3 #7.7

vblendvpd %ymm10, %ymm9, %ymm8, %ymm11 #7.7

vmovupd %ymm3, 96(%rdi,%rdx,8) #5.7

vmovupd %ymm11, 64(%rdi,%rdx,8) #5.7

addq $16, %rdx #3.3

cmpq %rax, %rdx #3.3

jb ..B1.11 # Prob 99% #3.3Listing 21: Lines of assembly code for compiling masking example on Broadwell.

The listing below shows the optimization report for the Broadwell target.

LOOP BEGIN at MA.c(3,3)

remark #15300: LOOP WAS VECTORIZED

remark #15448: unmasked aligned unit stride loads: 1

remark #15449: unmasked aligned unit stride stores: 1

remark #15450: unmasked unaligned unit stride loads: 1

remark #15476: scalar cost: 20

remark #15477: vector cost: 5.500

remark #15478: estimated potential speedup: 3.620

remark #25015: Estimate of max trip count of loop=625

LOOP ENDListing 22: Abridged optimization report for masking example on Broadwell.

For Skylake, the compiler produces a simpler assembly as shown in the listing below.

..B1.8: # Preds ..B1.8 ..B1.7

vmovups (%rsi,%rcx,8), %zmm3 #4.8

vmovups 64(%rsi,%rcx,8), %zmm7 #4.8

vmovups 128(%rsi,%rcx,8), %zmm11 #4.8

vmovups 192(%rsi,%rcx,8), %zmm15 #4.8

vmovups (%rdi,%rcx,8), %zmm2 #7.7

vmovups 64(%rdi,%rcx,8), %zmm6 #7.7

vmovups 128(%rdi,%rcx,8), %zmm10 #7.7

vmovups 192(%rdi,%rcx,8), %zmm14 #7.7

vcmppd $6, %zmm0, %zmm3, %k2 #4.15

vcmppd $6, %zmm0, %zmm7, %k4 #4.15

vcmppd $6, %zmm0, %zmm11, %k6 #4.15

knotw %k2, %k1 #7.15

knotw %k4, %k3 #7.15

knotw %k6, %k5 #7.15

vmovapd %zmm3, %zmm1{%k1}{z} #7.15

vcmppd $6, %zmm0, %zmm15, %k1 #4.15

vaddpd %zmm1, %zmm2, %zmm4 #7.7

knotw %k1, %k7 #7.15

vmulpd %zmm3, %zmm2, %zmm4{%k2} #5.7

vmovapd %zmm7, %zmm5{%k3}{z} #7.15

vmovapd %zmm11, %zmm9{%k5}{z} #7.15

vmovapd %zmm15, %zmm13{%k7}{z} #7.15

vaddpd %zmm5, %zmm6, %zmm8 #7.7

vaddpd %zmm9, %zmm10, %zmm12 #7.7

vaddpd %zmm13, %zmm14, %zmm16 #7.7

vmulpd %zmm7, %zmm6, %zmm8{%k4} #5.7

vmulpd %zmm11, %zmm10, %zmm12{%k6} #5.7

vmulpd %zmm15, %zmm14, %zmm16{%k1} #5.7

vmovupd %zmm4, (%rdi,%rcx,8) #5.7

vmovupd %zmm8, 64(%rdi,%rcx,8) #5.7

vmovupd %zmm12, 128(%rdi,%rcx,8) #5.7

vmovupd %zmm16, 192(%rdi,%rcx,8) #5.7

addq $32, %rcx #3.3

cmpq %rdx, %rcx #3.3

jb ..B1.8 # Prob 99% #3.3Listing 23: Lines of assembly code for compiling masking example on Skylake.

This AVX-512 code writes the result of the comparison in the if-statement condition (vcmppd) into masks k2, k4, k6 and k1, and the multiplication instruction vmulpd uses this bitmask. The intermediate store instructions vmovapd use the inverse of the masks stored in k1, k3, k5 and k7 (similar to vpblendvpd in AVX2), and eventually the data is written to memory by vmovupd without masking.

The optimization report for the Skylake target is shown in the listing below.

LOOP BEGIN at MA.c(3,3)

remark #15300: LOOP WAS VECTORIZED

remark #15448: unmasked aligned unit stride loads: 1

remark #15449: unmasked aligned unit stride stores: 1

remark #15450: unmasked unaligned unit stride loads: 1

remark #15476: scalar cost: 20

remark #15477: vector cost: 2.870

remark #15478: estimated potential speedup: 6.930

remark #25015: Estimate of max trip count of loop=312

LOOP ENDListing 24: Abridged optimization report for masking example on Skylake.

In addition to loop unrolling by a factor of 4, you can notice that instructions in the unrolled iterations are permuted. Eight loads (vmovups) are followed by three comparisons (vcmppd) and bitmask manipulation (knotw), and the fourth comparison comes later. Similar permutations are seen in masked additions (vaddpd) and multiplications (vmulpd). The compiler does not report the motivation for permutation. However, we speculate that it optimally packs the pipeline of the Skylake architecture core.

Interestingly, when we compiled the code for Knights Landing with -xMIC-AVX512, the compiler chose an unroll factor of 2 instead of 4 and also changed the instruction permutation strategy. The resulting assembly for KNL is shown in the listing below.

..B1.8: # Preds ..B1.8 ..B1.7

vcmppd $9, (%rsi,%rcx,8), %zmm0, %k2 #4.15 c1

vcmppd $9, 64(%rsi,%rcx,8), %zmm0, %k4 #4.15 c1

knotw %k2, %k1 #7.15 c3

knotw %k4, %k3 #7.15 c3

vmovups (%rdi,%rcx,8), %zmm2 #7.7 c3

vmovups 64(%rdi,%rcx,8), %zmm5 #7.7 c3

vmovupd (%rsi,%rcx,8), %zmm1{%k1}{z} #7.15 c9 stall 2

vmovupd 64(%rsi,%rcx,8), %zmm4{%k3}{z} #7.15 c9

vaddpd (%rdi,%rcx,8), %zmm1, %zmm3 #7.7 c15 stall 2

vaddpd 64(%rdi,%rcx,8), %zmm4, %zmm6 #7.7 c15

vmulpd (%rsi,%rcx,8), %zmm2, %zmm3{%k2} #5.7 c21 stall 2

vmovupd %zmm3, (%rdi,%rcx,8) #5.7 c27 stall 2

vmulpd 64(%rsi,%rcx,8), %zmm5, %zmm6{%k4} #5.7 c27

vmovupd %zmm6, 64(%rdi,%rcx,8) #5.7 c33 stall 2

addq $16, %rcx #3.3 c33

cmpq %rdx, %rcx #3.3 c35

jb ..B1.8 # Prob 99% #3.3 c37Listing 25: Lines of assembly code for compiling masking example for Knights Landing.

The differences between AVX-512 code for Skylake and Knights Landing further demonstrate that in the process of automatic vectorization, the compiler uses not only the instruction set of the target architecture but also the low-level technical details of the architecture organization, particularly pipelining.

2.5. AVX-512F: Compress/Expand

AVX-512F in Skylake enables the vectorization of the data compress and expand operations. The vcompress instruction reads elements from the input register using a read mask and writes them contiguously into the output register. The vexpand instruction does the opposite: reads adjacent elements from the input register and stores them in the destination register according to the write mask. These instructions are useful for compressing a subset of data into a compact new container or doing the opposite.

The listing below shows an example for compressing data with non-zero values.

void Compress(float* restrict A, float* restrict B) {

int i;

#pragma nounroll // Not needed in real-world calculation; used here to simplify assembly

for( i = 0; i < 8000; i++ ) {

if(A[i] != 0)

B[j++] = A[i];

}

}Listing 26: Data compress example.

The compress operation in this code is implemented differently for AVX2 and AVX-512. In AVX2, the compiler does not vectorize the data compression loop. The listing below shows the AVX2 assembly.

..B1.4: # Preds ..B1.6 ..B1.3

# Execution count [8.00e+03]

vmovss (%rdi,%rax,4), %xmm1 #5.8

vucomiss %xmm0, %xmm1 #5.16

jp ..B1.5 # Prob 0% #5.16

je ..B1.6 # Prob 16% #5.16

# LOE rax rdx rbx rbp rsi rdi r12 r13 r14 r15 xmm0 xmm1

..B1.5: # Preds ..B1.4

# Execution count [6.72e+03]

vmovss %xmm1, (%rsi,%rdx,4) #6.7

incq %rdx #6.9

# LOE rax rdx rbx rbp rsi rdi r12 r13 r14 r15 xmm0

..B1.6: # Preds ..B1.5 ..B1.4

# Execution count [8.00e+03]

incq %rax #4.25

cmpq $8000, %rax #4.19

jl ..B1.4 # Prob 99% #4.19Listing 27: Lines from assembly code for compiling data compress example on Broadwell.

The loop processes one element of A at a time because vmovss is a scalar load operation. The vucomiss operation performs a comparison of A[i] with 0, and the subsequent code either stores it in B (block B1.5) or skips it (block B1.6).

The optimization report in the listing below shows that vector dependence prevents vectorization, and the loop uses scalar instructions.

LOOP BEGIN at compress.c(4,3)

remark #15344: loop was not vectorized: vector dependence prevents vectorization

remark #15346: vector dependence: assumed FLOW dependence between j (6:9) and j (6:7)

remark #15346: vector dependence: assumed ANTI dependence between j (6:7) and j (6:9)

LOOP ENDListing 28: Lines from optimization report for data compress example on Broadwell.

When we compiled the code for Skylake (AVX-512), the loop was vectorized. The listing below shows the assembly. The compress operation is performed directly using the vcompressps instruction. It copies and compresses up to 16 single precision floating-point values into the destination operand using the source operand ZMM1 and the opmask register k1. The opmask register selects the elements from the source that get copied and compressed.

..B1.16: # Preds ..B1.18 ..B1.15

# Execution count [0.00e+00]

vmovups (%rdi,%rax,4), %zmm1 #5.8

vpxord %zmm0, %zmm0, %zmm0 #5.16

vcmpps $4, %zmm0, %zmm1, %k1 #5.16

kmovw %k1, %r9d #5.16

testl %r9d, %r9d #5.16

je ..B1.18 # Prob 20% #5.16

# LOE rax rdx rbx rbp rdi r8 r12 r13 r14 r15 ecx esi r9d zmm1 k1

..B1.17: # Preds ..B1.16

# Execution count [8.00e+03]

movslq %ecx, %rcx #6.7

popcnt %r9d, %r9d #6.7

vcompressps %zmm1, (%r8,%rcx,4){%k1} #6.7

addl %r9d, %ecx #6.7

# LOE rax rdx rbx rbp rdi r8 r12 r13 r14 r15 ecx esi

..B1.18: # Preds ..B1.17 ..B1.16

# Execution count [8.00e+03]

addq $16, %rax #4.3

cmpq %rdx, %rax #4.3

jb ..B1.16 # Prob 99% #4.3Listing 29: Lines from assembly code for compiling data compress example on Skylake.

The optimization report in the listing below shows that the loop was vectorized and that the compiler detects one compress operation. The estimated potential speedup is 22.0 compared to scalar code.

LOOP BEGIN at compress.c(4,3)

remark #15300: LOOP WAS VECTORIZED

remark #15442: entire loop may be executed in remainder

remark #15448: unmasked aligned unit stride loads: 1

remark #15457: masked unaligned unit stride stores: 1

remark #15475: --- begin vector cost summary ---

remark #15476: scalar cost: 18

remark #15477: vector cost: 0.810

remark #15478: estimated potential speedup: 22.000

remark #15488: --- end vector cost summary ---

remark #15497: vector compress: 1

remark #25015: Estimate of max trip count of loop=500

LOOP ENDListing 30: Lines from the optimization report for data compress example on Skylake.

2.6. AVX-512F: New Shuffle Instructions

AVX-512 on Skylake introduces a new set of shuffle instructions that allow you to rearrange elements in one or two source registers and write them to the destination register. These instructions are supported for different element sizes: byte, word, double and quadword.

The listing below shows a function that performs a transposition of an 8×8 matrix of double precision floating-point numbers.

void Transpose8x8(double * restrict A, double * restrict B) {

int i, j;

for (i = 0; i < 8; i++)

for (j = 0; j < 8; j++)

A[i*8 + j] = B[j*8 + i];

}Listing 31: Matrix transposition example.

We compiled the code with AVX2 using the compilation line in the listing below.

icc PR.c -S -vec-threshold0 -xCORE-AVX2 -qopt-report=5 Listing 32: Compilation commands for matrix transposition example (Broadwell).

The optimization report in the listing below shows a potential speedup of 1.48 due to vectorization.

LOOP BEGIN at PR.c(4,5)

remark #15389: vectorization support: reference A[i*8+j] has unaligned access [ PR.c(5,7) ]

remark #15381: vectorization support: unaligned access used inside loop body

remark #15328: vectorization support: non-unit strided load was emulated for the variable <B[j*8+i]>, stride is 8 [ PR.c(5,20) ]

remark #15305: vectorization support: vector length 4

remark #15427: loop was completely unrolled

remark #15399: vectorization support: unroll factor set to 2

remark #15309: vectorization support: normalized vectorization overhead 0.125

remark #15300: LOOP WAS VECTORIZED

remark #15451: unmasked unaligned unit stride stores: 1

remark #15452: unmasked strided loads: 1

remark #15475: --- begin vector cost summary ---

remark #15476: scalar cost: 5

remark #15477: vector cost: 3.000

remark #15478: estimated potential speedup: 1.480

remark #15488: --- end vector cost summary ---

LOOP ENDListing 33: Optimization report for matrix transposition example on Broadwell.

Even though Broadwell can run this code with vector instructions, it is important to see how exactly the Intel compiler achieves this in AVX2. The listing below shows the assembly code for the matrix transpose example compiled with -xCORE-AVX2. This code shuffles elements using multiple vmovups and vinsertf128 instructions followed by vunpckhpd instruction. Instruction vunpckhpd unpacks and interleaves double precision floating-point elements from the source operand into the destination operand.

vmovups 16(%rsi), %xmm7 #5.20

vmovups 80(%rsi), %xmm9 #5.20

vmovups 64(%rsi), %xmm8 #5.20

vmovups (%rsi), %xmm6 #5.20

vmovups 256(%rsi), %xmm15 #5.20

vmovups 272(%rsi), %xmm1 #5.20

vmovups 320(%rsi), %xmm2 #5.20

vmovups 336(%rsi), %xmm0 #5.20

vinsertf128 $1, 144(%rsi), %ymm7, %ymm12 #5.20

vinsertf128 $1, 208(%rsi), %ymm9, %ymm13 #5.20

vunpcklpd %ymm13, %ymm12, %ymm5 #5.20

vmovupd %ymm5, 128(%rdi) #5.7

vmovups 96(%rsi), %xmm5 #5.20

vunpckhpd %ymm13, %ymm12, %ymm3 #5.20

vmovupd %ymm3, 192(%rdi) #5.7

vmovups 32(%rsi), %xmm3 #5.20

vinsertf128 $1, 128(%rsi), %ymm6, %ymm10 #5.20

vinsertf128 $1, 192(%rsi), %ymm8, %ymm11 #5.20

vunpcklpd %ymm11, %ymm10, %ymm14 #5.20

vunpckhpd %ymm11, %ymm10, %ymm4 #5.20

vmovupd %ymm14, (%rdi) #5.7

vmovupd %ymm4, 64(%rdi) #5.7

vmovups 48(%rsi), %xmm4 #5.20

vmovups 112(%rsi), %xmm14 #5.20

vinsertf128 $1, 384(%rsi), %ymm15, %ymm6 #5.20

vinsertf128 $1, 400(%rsi), %ymm1, %ymm8 #5.20

vinsertf128 $1, 448(%rsi), %ymm2, %ymm7 #5.20

vinsertf128 $1, 464(%rsi), %ymm0, %ymm9 #5.20

vunpcklpd %ymm7, %ymm6, %ymm10 #5.20

vunpckhpd %ymm7, %ymm6, %ymm11 #5.20

vunpcklpd %ymm9, %ymm8, %ymm12 #5.20

vunpckhpd %ymm9, %ymm8, %ymm13 #5.20

vmovups 304(%rsi), %xmm6 #5.20

vmovups 352(%rsi), %xmm7 #5.20

vmovups 368(%rsi), %xmm8 #5.20

vmovupd %ymm10, 32(%rdi) #5.7

vmovupd %ymm11, 96(%rdi) #5.7

vmovupd %ymm12, 160(%rdi) #5.7

vmovupd %ymm13, 224(%rdi) #5.7

vinsertf128 $1, 224(%rsi), %ymm5, %ymm2 #5.20

vmovups 288(%rsi), %xmm5 #5.20

vinsertf128 $1, 160(%rsi), %ymm3, %ymm1 #5.20

vinsertf128 $1, 176(%rsi), %ymm4, %ymm0 #5.20

vinsertf128 $1, 240(%rsi), %ymm14, %ymm3 #5.20

vunpcklpd %ymm2, %ymm1, %ymm4 #5.20

vinsertf128 $1, 416(%rsi), %ymm5, %ymm9 #5.20

vinsertf128 $1, 432(%rsi), %ymm6, %ymm11 #5.20

vinsertf128 $1, 480(%rsi), %ymm7, %ymm10 #5.20

vinsertf128 $1, 496(%rsi), %ymm8, %ymm12 #5.20

vunpckhpd %ymm2, %ymm1, %ymm1 #5.20

vunpcklpd %ymm3, %ymm0, %ymm2 #5.20

vunpckhpd %ymm3, %ymm0, %ymm0 #5.20

vmovupd %ymm4, 256(%rdi) #5.7

vmovupd %ymm1, 320(%rdi) #5.7

vmovupd %ymm2, 384(%rdi) #5.7

vmovupd %ymm0, 448(%rdi) #5.7

vunpcklpd %ymm10, %ymm9, %ymm13 #5.20

vunpckhpd %ymm10, %ymm9, %ymm15 #5.20

vunpcklpd %ymm12, %ymm11, %ymm3 #5.20

vunpckhpd %ymm12, %ymm11, %ymm4 #5.20

vmovupd %ymm13, 288(%rdi) #5.7

vmovupd %ymm15, 352(%rdi) #5.7

vmovupd %ymm3, 416(%rdi) #5.7

vmovupd %ymm4, 480(%rdi) #5.7Listing 34: Lines from assembly code for compiling the matrix transposition example for Broadwell.

In the case of Skylake, the compilation line in the listing below is used to compile the matrix transposition example code and produce an assembly file and an optimization report.

icc PR.c -S -vec-threshold0 -xCORE-AVX512 -qopt-report=5 Listing 35: Compilation commands for matrix transposition example (Skylake).

The optimization report in the listing below confirms that the loop was vectorized and shows an estimated potential speedup of 1.9 due to vectorization.

LOOP BEGIN at PR.c(4,5)

remark #15389: vectorization support: reference A[i*8+j] has unaligned access [ PR.c(5,7) ]

remark #15381: vectorization support: unaligned access used inside loop body

remark #15415: vectorization support: non-unit strided load was generated for the variable <B[j*8+i]>, stride is 8 [ PR.c(5,20) ]

remark #15305: vectorization support: vector length 8

remark #15427: loop was completely unrolled

remark #15309: vectorization support: normalized vectorization overhead 0.167

remark #15300: LOOP WAS VECTORIZED

remark #15451: unmasked unaligned unit stride stores: 1

remark #15452: unmasked strided loads: 1

remark #15475: --- begin vector cost summary ---

remark #15476: scalar cost: 5

remark #15477: vector cost: 2.250

remark #15478: estimated potential speedup: 1.900

remark #15488: --- end vector cost summary ---

LOOP ENDListing 36: Optimization report for matrix transposition example on Skylake.

On Skylake, vectorization takes advantage of the new shuffle instructions. The listing below shows the assembly for the matrix transposition example with the new AVX-512 instructions vpermt2q and vpermi2q. These instructions permute double precision floating-point numbers from ZMM registers and store the result back into the destination register. The following step is to blend and align the permuted data before storing it back. Instruction vpblendmq blends elements from ZMM registers using the opmask registers, and valignd merges vector registers.

vmovdqu32 (%rsi), %zmm20 #5.20

vmovdqu32 64(%rsi), %zmm7 #5.20

vmovdqu32 128(%rsi), %zmm16 #5.20

vmovdqu32 192(%rsi), %zmm9 #5.20

vmovdqu32 256(%rsi), %zmm19 #5.20

vmovdqu32 320(%rsi), %zmm11 #5.20

vmovdqu32 384(%rsi), %zmm18 #5.20

vmovdqu32 448(%rsi), %zmm13 #5.20

vmovdqu32 .L_2il0floatpacket.0(%rip), %zmm5 #5.20

vmovdqu32 .L_2il0floatpacket.1(%rip), %zmm2 #5.20

vmovdqu32 .L_2il0floatpacket.2(%rip), %zmm4 #5.20

vmovdqu32 .L_2il0floatpacket.3(%rip), %zmm3 #5.20

vmovdqu32 .L_2il0floatpacket.5(%rip), %zmm6 #5.20

vmovdqu32 .L_2il0floatpacket.6(%rip), %zmm8 #5.20

vmovdqu32 .L_2il0floatpacket.7(%rip), %zmm10 #5.20

vmovdqu32 .L_2il0floatpacket.8(%rip), %zmm12 #5.20

vmovdqu32 .L_2il0floatpacket.4(%rip), %zmm17 #5.20

vpermi2q %zmm7, %zmm20, %zmm5 #5.20

vpermi2q %zmm9, %zmm16, %zmm2 #5.20

vpermi2q %zmm11, %zmm19, %zmm4 #5.20

vpermi2q %zmm13, %zmm18, %zmm3 #5.20

vpermt2q %zmm7, %zmm6, %zmm20 #5.20

vpermt2q %zmm9, %zmm8, %zmm16 #5.20

vpermt2q %zmm11, %zmm10, %zmm19 #5.20

vpermt2q %zmm13, %zmm12, %zmm18 #5.20

kmovw %eax, %k1 #5.20

vpblendmq %zmm2, %zmm5, %zmm1{%k1} #5.20

movl $240, %eax #5.20

vpblendmq %zmm3, %zmm4, %zmm0{%k1} #5.20

vpblendmq %zmm16, %zmm20, %zmm15{%k1} #5.20

vpblendmq %zmm18, %zmm19, %zmm14{%k1} #5.20

vpermt2q %zmm2, %zmm17, %zmm5 #5.20

vpermt2q %zmm3, %zmm17, %zmm4 #5.20

vpermt2q %zmm16, %zmm17, %zmm20 #5.20

vpermt2q %zmm18, %zmm17, %zmm19 #5.20

valignd $8, %zmm1, %zmm0, %zmm22 #5.20

valignd $8, %zmm5, %zmm4, %zmm24 #5.20

valignd $8, %zmm15, %zmm14, %zmm26 #5.20

valignd $8, %zmm20, %zmm19, %zmm28 #5.20

vmovupd %zmm22, 64(%rdi) #5.7

vmovupd %zmm24, 192(%rdi) #5.7

vmovupd %zmm26, 320(%rdi) #5.7

vmovupd %zmm28, 448(%rdi) #5.7

kmovw %eax, %k2 #5.20

vpblendmq %zmm0, %zmm1, %zmm21{%k2} #5.20

vpblendmq %zmm4, %zmm5, %zmm23{%k2} #5.20

vpblendmq %zmm14, %zmm15, %zmm25{%k2} #5.20

vpblendmq %zmm19, %zmm20, %zmm27{%k2} #5.20

vmovupd %zmm21, (%rdi) #5.7

vmovupd %zmm23, 128(%rdi) #5.7

vmovupd %zmm25, 256(%rdi) #5.7

vmovupd %zmm27, 384(%rdi) #5.7Listing 37: Lines from assembly code for transposition example on Skylake.

2.7. AVX-512F: Gather/Scatter

AVX2 includes a gather operation, which loads vector elements from non-adjacent memory locations into a contiguous 256-bit YMM register. AVX-512F introduces gather with 512-bit registers and also a scatter instruction, which stores elements from a contiguous vector into non-adjacent memory locations.

The listing below shows memory access with non-contiguous memory access, where the code reads a contiguous array Y and scatters its elements into array X with a fixed stride of 8.

void Scatter( double* restrict X, double* restrict Y ) {

int i;

for(i = 0; i < 10000; i++)

X[i*8] = Y[i];

}Listing 38: Scatter example.

In the assembly compiled for Broadwell is shown in the listing below.

..B1.7: # Preds ..B1.7 ..B1.6

# Execution count [1.00e+04]

vmovupd (%rsi,%r8,8), %ymm0 #4.14

movl %r8d, %ecx #4.5

vmovupd 32(%rsi,%r8,8), %ymm2 #4.14

shll $3, %ecx #4.5

addq $8, %r8 #3.3

movslq %ecx, %rcx #4.5

cmpq %rdx, %r8 #3.3

vextractf128 $1, %ymm0, %xmm1 #4.5

vextractf128 $1, %ymm2, %xmm3 #4.5

vmovsd %xmm0, (%rdi,%rcx,8) #4.5

vmovhpd %xmm0, 64(%rdi,%rcx,8) #4.5

vmovsd %xmm1, 128(%rdi,%rcx,8) #4.5

vmovhpd %xmm1, 192(%rdi,%rcx,8) #4.5

vmovsd %xmm2, 256(%rdi,%rcx,8) #4.5

vmovhpd %xmm2, 320(%rdi,%rcx,8) #4.5

vmovsd %xmm3, 384(%rdi,%rcx,8) #4.5

vmovhpd %xmm3, 448(%rdi,%rcx,8) #4.5

jb ..B1.7 # Prob 99% #3.3Listing 39: Lines from the assembly code for the scatter example on Broadwell.

This listing demonstrates that the scatter operation is performed using multiple vextractf128 and scalar vmovsd/vmovhpd instructions. First, vmovupd loads four consecutive values from Y into a YMM register. Then vextractf128 stores half of that YMM register into a new XMM register. vmovsd stores the lower half of the XMM register (which amounts to one 64-bit double precision element) into array X, vmovhpd stores the upper half in the next position in array X. Then the procedure is repeated until all four loaded values are scattered.

The optimization report in the listing below confirms that the loop was vectorized and mentions that a non-unit stride store (i.e., a scatter operation) was emulated for the array A.

LOOP BEGIN at scatter.c(3,3)

remark #15388: vectorization support: reference Y[i] has aligned access [ scatter.c(4,14) ]

remark #15329: vectorization support: non-unit strided store was emulated for the variable <X[i*8]>, stride is 8 [ scatter.c(4,5) ]

remark #15305: vectorization support: vector length 8

remark #15309: vectorization support: normalized vectorization overhead 0.435

remark #15300: LOOP WAS VECTORIZED

remark #15442: entire loop may be executed in remainder

remark #15448: unmasked aligned unit stride loads: 1

remark #15453: unmasked strided stores: 1

remark #15475: --- begin vector cost summary ---

remark #15476: scalar cost: 5

remark #15477: vector cost: 2.870

remark #15478: estimated potential speedup: 1.730

remark #15488: --- end vector cost summary ---

remark #25015: Estimate of max trip count of loop=1250

LOOP ENDListing 40: Optimization report for the scatter example on Broadwell.

The code compiled for Skylake (the listing below) shows that the compiler used the instruction vscatterdpd. This instruction belongs to AVX-512F module. It scatters a register of double precision floating-point values to non-contiguous memory locations. The base address is YMM0, the offsets are stored in r9, and the write mask k1/k2 has all bits set to 1.

..B1.7: # Preds ..B1.7 ..B1.6

# Execution count [1.00e+04]

vpcmpeqb %xmm0, %xmm0, %k1 #4.5

movl %r8d, %ecx #4.5

vmovups (%rsi,%r8,8), %zmm1 #4.14

vmovups 64(%rsi,%r8,8), %zmm2 #4.14

shll $3, %ecx #4.5

addq $16, %r8 #3.3

movslq %ecx, %rcx #4.5

lea (%rdi,%rcx,8), %r9 #4.5

vscatterdpd %zmm1, (%r9,%ymm0,8){%k1} #4.5

vpcmpeqb %xmm0, %xmm0, %k2 #4.5

vscatterdpd %zmm2, 512(%r9,%ymm0,8){%k2} #4.5

cmpq %rdx, %r8 #3.3

jb ..B1.7 # Prob 99% #3.3Listing 41: Lines from the assembly code for scatter example on Skylake.

The optimization report in the listing below also shows that the loop was vectorized, but this time non-unit stride store was generated, rather than emulated for access to array A.

LOOP BEGIN at scatter.c(3,3)

remark #15388: vectorization support: reference Y[i] has aligned access [ scatter.c(4,14) ]

remark #15416: vectorization support: non-unit strided store was generated for the variable <X[i*8]>, stride is 8 [ scatter.c(4,5) ]

remark #15305: vectorization support: vector length 16

remark #15309: vectorization support: normalized vectorization overhead 0.270

remark #15300: LOOP WAS VECTORIZED

remark #15442: entire loop may be executed in remainder

remark #15448: unmasked aligned unit stride loads: 1

remark #15453: unmasked strided stores: 1

remark #15475: --- begin vector cost summary ---

remark #15476: scalar cost: 5

remark #15477: vector cost: 2.310

remark #15478: estimated potential speedup: 2.150

remark #15488: --- end vector cost summary ---

remark #25015: Estimate of max trip count of loop=625

LOOP ENDListing 42: Optimization report for scatter example on Skylake.

When memory access has good locality, the cost of gather/scatter is high, and the compiler may replace a scatter operation with permutations or shuffles followed by unit-stride access. For example, the code in the listing below accesses memory with a stride of 2. For this pattern, in every cache line, 1 out of 2 elements is written. Stride 2 has better locality than stride 8, where only 1 element out of 8 is written.

void Scatter2( double* restrict X, double* restrict Y ) {

int i;

for(i = 0; i < 10000; i++)

X[i*2] = Y[i];

}Listing 43: Scatter with a small stride example.

AVX-512 assembly for this case is shown in the listing below. Instead of vgatherdpd, the compiler chose to use vexpandpd and vpermpd operations followed by unit-stride masked stores vmovupd.

..B1.7: # Preds ..B1.7 ..B1.6

# Execution count [1.00e+04]

vmovups (%rsi,%r8,8), %zmm2 #4.14

movl %r8d, %ecx #4.5

vmovups 64(%rsi,%r8,8), %zmm5 #4.14

vexpandpd %zmm2, %zmm1{%k1}{z} #4.5

vpermpd %zmm2, %zmm0, %zmm3 #4.5

vexpandpd %zmm5, %zmm4{%k1}{z} #4.5

vpermpd %zmm5, %zmm0, %zmm6 #4.5

addl %ecx, %ecx #4.5

addq $16, %r8 #3.3

movslq %ecx, %rcx #4.5

vmovupd %zmm1, (%rdi,%rcx,8){%k1} #4.5

vmovupd %zmm3, 64(%rdi,%rcx,8){%k1} #4.5

vmovupd %zmm4, 128(%rdi,%rcx,8){%k1} #4.5

vmovupd %zmm6, 192(%rdi,%rcx,8){%k1} #4.5

cmpq %rdx, %r8 #3.3

jb ..B1.7 # Prob 99% #3.3Listing 44: Assembly for the scatter example with a stride of 2 on Skylake.

2.8. AVX-512F: Embedded Broadcasting

Embedded broadcasting, a feature introduced in AVX-512, allows vector instructions to replicate scalar elements across vector lanes and use them as operands. Before AVX-512, broadcasting a scalar across vector lanes was possible, but it required an explicit broadcast operation, and its result occupied an additional vector register. Embedded broadcasting is supported for 32-bit and 64-bit scalars, which get replicated 16 or 8 times, respectively, but not for byte and word types. Because embedded broadcasting does not require an additional register to store the result, it is particularly useful when the register space is in short supply.

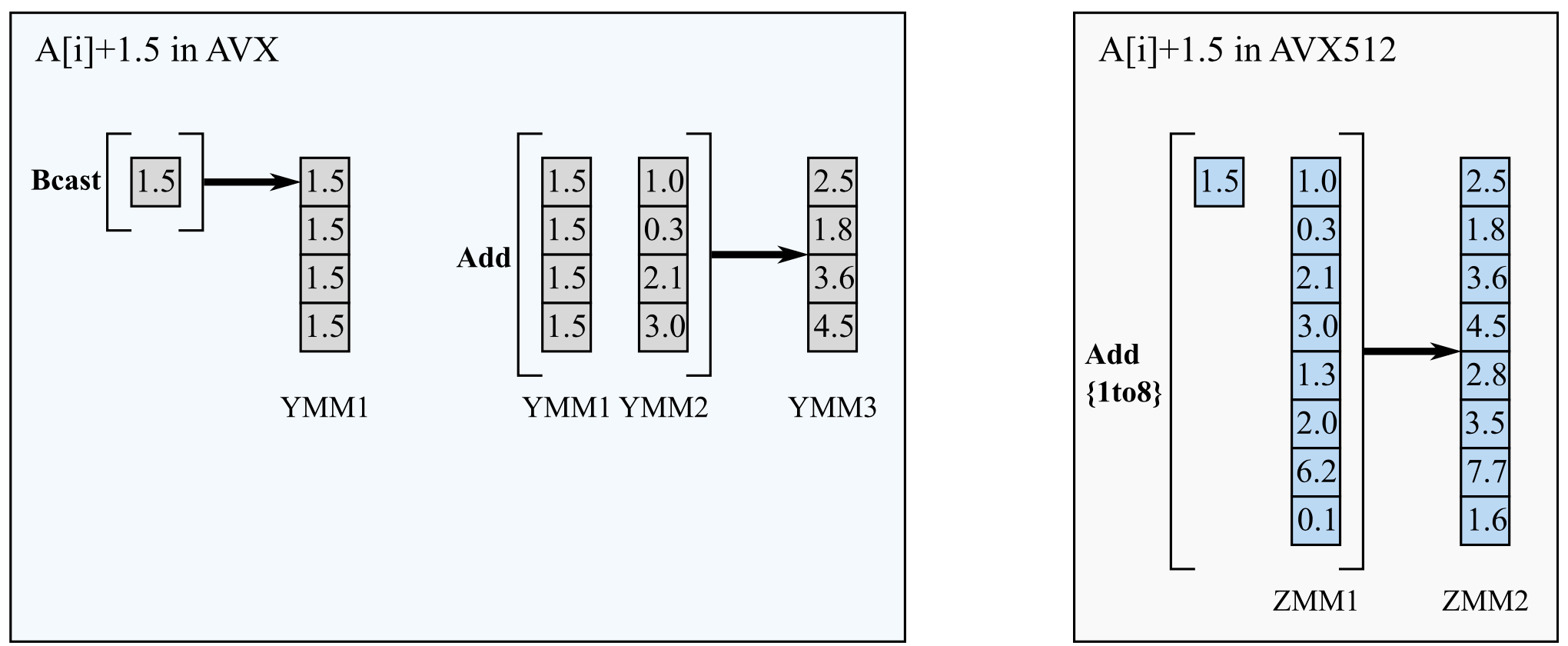

The figure below illustrates the difference between broadcast in AVX and AVX-512 for an example kernel A[i]+1.5, where the addition is vectorized in index i.

Figure 6: Broadcasting a scalar value across vector lanes in AVX and AVX-512.

The example in the listing below shows a case where embedded broadcasting may be used.

void Embedded_Broadcast (float * restrict A, float * restrict L, float * restrict U) {

int i, j, k;

int n = 16;

for ( i = 0; i < n; i++)

for ( k = 0; k < n; k++)

for ( j = 0; j < n; j++)

A[i*n + j] += L[i*n + k]*U[k*n + j];

}Listing 45: Matrix-matrix multiplication can benefit from embedded broadcast.

In this code, the innermost loop in j gets vectorized. The compiler expresses the multiplication and accumulation operations in the loop for 8 values (in AVX) or 16 values (in AVX-512) of j as an FMA instruction with three operands. Two of these operands are vectors with data loaded from A and U, and the third one has the scalar value L[i*n+k] in all vector lanes.

The listing below shows the assembly for the above example compiled for Broadwell.

..B1.2: # Preds ..B1.2 ..B1.1

# Execution count [4.56e+00]

vmovups 32(%rdi,%rax,4), %ymm4 #8.9

incb %dl #5.3

vmovups (%rdi,%rax,4), %ymm2 #8.9

vbroadcastss 16(%rsi,%rax,4), %ymm3 #8.23

vbroadcastss 12(%rsi,%rax,4), %ymm14 #8.23

vbroadcastss 8(%rsi,%rax,4), %ymm1 #8.23

vbroadcastss 4(%rsi,%rax,4), %ymm13 #8.23

vbroadcastss (%rsi,%rax,4), %ymm15 #8.23

vfmadd231ps -336(%rbp), %ymm3, %ymm4 #8.9[spill]

vfmadd231ps -720(%rbp), %ymm15, %ymm2 #8.9[spill]

vfmadd231ps -400(%rbp), %ymm14, %ymm4 #8.9[spill]

vfmadd231ps -464(%rbp), %ymm1, %ymm4 #8.9[spill]

vfmadd231ps -656(%rbp), %ymm13, %ymm4 #8.9[spill]

...

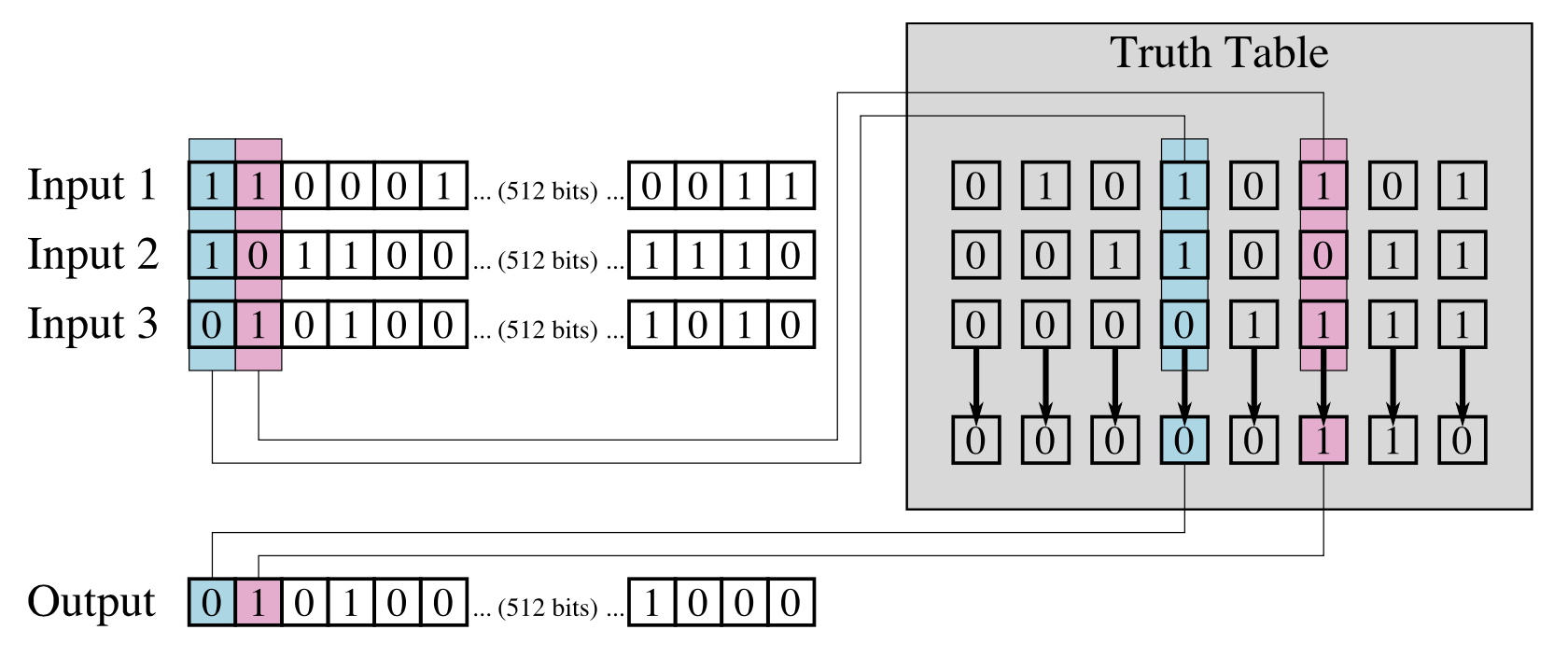

addq $16, %rax #5.3