A Survey and Benchmarks of Intel® Xeon® Gold and Platinum Processors

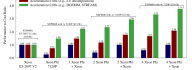

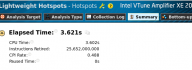

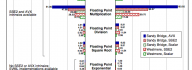

This paper provides quantitative guidelines and performance estimates for choosing a processor among the Platinum and Gold groups of the Intel Xeon Scalable family (formerly Skylake). The performance estimates are based on detailed technical specifications of the processors, including the efficiency of the Intel Turbo Boost technology. The achievable performance metrics are experimentally validated on several processor models with synthetic workloads. The best choice of processor depends on the nature of the application for which it is intended: its multi-threading or multi-processing efficiency, support for vectorization, and dependence on memory bandwidth.

Colfax-Xeon-Scalable.pdf (334 KB)

Table of Contents

1. Which Xeon is Right for You?
2. CPU Comparison for Different Workloads
   2.4. Bandwidth-Limited
3. Processor Choice Recommendations
4. Silver and Bronze Models
5. Large Memory, Integrated Fabric, Thermal Optimization

1. Which Xeon is Right for You?

In 2017, the Intel Xeon Scalable processor family was released, featuring the Skylake architecture. [...]