HOW Series “Deep Dive”: Webinars on Performance Optimization – 2017 Edition

In a Nutshell

HOW Series “Deep Dive” is a free Web-based training on parallel programming and performance optimization on Intel architecture. The workshop includes 20 hours of instruction and code for hands-on exercises. This training is free to everyone thanks to Intel’s sponsorship.

You can access the video recordings of lectures, slides of presentations and code of practical exercises on this page using a free Colfax Research account.

To run the hands-on exercises, you will need a multi-core Intel architecture processor and the Intel C++ Compiler. You can get this compiler for 30 days at no cost using an evaluation license for Intel Parallel Studio XE.

Background

The HOW series ran in 2016-2017 as a live event. At that time, attendees were given remote access to a dedicated computing cluster to run programming exercises. Live broadcasts were accompanied by text Q&A chat with the instructor. Attendees of 6 or more broadcasts received a certificate of completion.

Currently, the HOW series is available only in self-study format. We can notify you of new courses if you sign up for our newsletter.

Why Attend the HOW Series

Here is what the HOW Series training will deliver for you:

Learn Modern Code

Are you realizing the payoff of parallel processing? Are you aware that without code optimization, computational applications may perform orders of magnitude worse than they are supposed to?

The Web-based HOW Series training provides the extensive knowledge needed to extract more of the parallel compute performance potential found in both Intel® Xeon® and Intel® Xeon Phi™ processors and coprocessors. Course materials and practical exercises are appropriate for developers beginning their journey to parallel programming, with enough detail to also cater to high-performance computing experts.

Jump to detailed descriptions of sessions to see what you will learn.

Practice New Skills

The HOW series is an experiential learning program because you get to see code optimization performed live and also get to practice it with your own hands. The workshop consists of instructional and hands-on self-study components:

The instructional part of the workshop consists of 10 lecture sessions. Each session presents 1 hour of theory and 1 hour of practical demonstrations. Lectures can be viewed on demand as streaming video.

In the self-study part, you can run the exercises provided in the course on a multi-core Intel architecture processor, including the Intel Core (i3/i5/i7), Intel Xeon or Intel Xeon Phi x200 family CPUs.

What HOW Series Graduates are Saying:

“An un-parallel resource for learning trade skills of using Intel Xeon Phi platform for Parallel Computing. What makes this program all the more engaging is its focus on the ‘doing’ aspect as there is no substitute to ‘learning by doing’.”

Gaurav Verma

HOW attendee

“I am a physicist working with Monte Carlo models of particle transport on matter. My main working language is Fortran and I was able to follow the course without problems. The course gave me all the tools needed to start working with the Intel Xeon Phi MICs.”

Edgardo Doerner

HOW attendee

“This is a very well thought out course that gradually introduces the important concepts. The presenter and his team are experts with a lot of experience as evident from the lectures. The live demos for optimizations are very educative and well executed.”

Vikram K. Narayana

HOW attendee

Course Roadmap

Module I

Programming

Session 01

Intel Architecture and Modern Code

Session 02

Xeon Phi, Coprocessors, Omni-Path

Module II

Expressing Parallelism

Session 03

Expressing Parallelism with Vectors

Session 04

Multi-threading with OpenMP

Session 05

Distributed Computing, MPI

Module III

Optimization

Session 06

Optimization Overview: N-body

Session 07

Scalar Tuning, Vectorization

Session 08

Common Multi-threading Problems

Session 09

Multi-Threading, Memory Aspect

Session 10

Access to Caches and Memory

Instructor Bio

Andrey Vladimirov, Ph.D., is Head of HPC Research at Colfax International. His primary interest is the application of modern computing technologies to computationally demanding scientific problems. Prior to joining Colfax, A. Vladimirov was involved in computational astrophysics research at Stanford University, North Carolina State University, and the Ioffe Institute (Russia), where he studied cosmic rays, collisionless plasmas and the interstellar medium using computer simulations. He is the lead author of the book “Parallel Programming and Optimization with Intel Xeon Phi Coprocessors”, a regular contributor to the online resource Colfax Research, author of invited papers in “High Performance Parallelism Pearls” and “Intel Xeon Phi Processor High Performance Programming: Knights Landing Edition”, and an author or co-author of over 10 peer-reviewed publications in the fields of theoretical astrophysics and scientific computing.

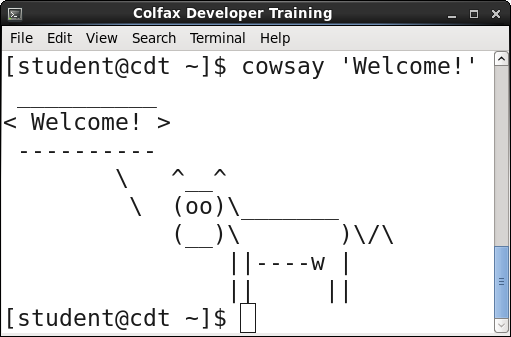

Prerequisites

We assume that you know the fundamentals of programming in C or C++ in Linux. If you are not familiar with Linux, read a basic tutorial such as this one. We assume that you know how to use a text-based terminal to manipulate files, edit code and compile applications.

Remote Access for Hands-On Exercises

Currently, we do not provide access to training servers. In 2016-2017, registrants received remote access to a cluster of training servers. The compute nodes in the cluster were based on Intel Xeon Phi x200 family processors (formerly KNL), and additional nodes with Intel Xeon processors and Intel Xeon Phi coprocessors (KNC) were available. Intel software development tools were provided in the cluster under an evaluation license.

Slides, Code and Video:

Code

Practical exercises in this training were originally published as supplementary code for the book “Parallel Programming and Optimization with Intel Xeon Phi Coprocessors”. Since then, we have re-released them under the MIT license and posted them on GitHub with the latest updates.

You can download the labs as a ZIP archive or clone from GitHub:

git clone https://github.com/ColfaxResearch/HOW-Series-Labs.git

Video

Session 01

Intel Architecture and Modern Code

We introduce Intel Xeon and Intel Xeon Phi processors and discuss their features and purpose. We also begin an introduction to portable, future-proof parallel programming: thread parallelism, vectorization, and optimized memory access patterns. The hands-on part introduces Intel compilers and additional software for efficient solution of computational problems, and illustrates the usage of the Colfax Cluster for the programming exercises presented in the course.

Slides: Colfax_HOW_Series_01.pdf (13 MB)

Session 02

Xeon Phi, Coprocessors, Omni-Path

We talk about high-bandwidth memory (MCDRAM) in Intel Xeon Phi processors and demonstrate programming techniques for using it. We also discuss the coprocessor form factor of the Intel Xeon Phi platform and learn to use the native and the explicit offload programming models. The session introduces the high-bandwidth interconnect based on the Intel Omni-Path Architecture and discusses its application in heterogeneous programming with offload over fabric.

Slides: Colfax_HOW_Series_02.pdf (4 MB)

Session 03

Expressing Parallelism with Vectors

This session introduces data parallelism and automatic vectorization. Topics include the concept of SIMD operations, the history and future of vector instructions in Intel Architecture (including AVX-512), using intrinsics to vectorize code, and automatic vectorization with Intel compilers for loops, expressions with array notation and SIMD-enabled functions. The hands-on part focuses on using the Intel compiler to perform automatic vectorization, diagnosing its success, and making automatic vectorization happen when the compiler does not see opportunities for data parallelism.
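
As a rough illustration of the kind of loop that automatic vectorization targets, here is a minimal sketch. It is not taken from the course labs; the function name `saxpy` is our own choice for this example. The loop has unit-stride access and no dependence between iterations, and the `#pragma omp simd` hint tells the compiler so explicitly.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Illustrative only (not from the course labs): y[i] = a * x[i] + y[i].
// Unit-stride access and independent iterations make this loop a natural
// candidate for automatic vectorization.
void saxpy(float a, const std::vector<float>& x, std::vector<float>& y) {
    assert(x.size() == y.size());
    const float* xp = x.data();
    float* yp = y.data();
    const std::size_t n = x.size();
    // Hint that iterations are independent. A compiler without OpenMP SIMD
    // support ignores the pragma and the loop still runs correctly.
#pragma omp simd
    for (std::size_t i = 0; i < n; ++i) {
        yp[i] = a * xp[i] + yp[i];
    }
}
```

Whether the loop was actually vectorized is best confirmed from the compiler's own diagnostics, for example the optimization report that the Intel compiler can produce with `-qopt-report`.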

Slides: Colfax_HOW_Series_03.pdf (3 MB)

Session 04

Multi-threading with OpenMP

A crash course in thread parallelism and the OpenMP framework. We talk about using threads to utilize multiple processor cores, coordinating thread and data parallelism, and using OpenMP to create threads and team them up to process loops and trees of tasks. You will learn to control the number of threads, variable sharing and loop scheduling modes, to use mutexes to prevent race conditions, and to implement parallel reduction. The hands-on part demonstrates using OpenMP to parallelize a serial computation and shows for-loops, variable sharing, mutexes and parallel reduction on an example application performing numerical integration.
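
To make the idea of parallel reduction in numerical integration concrete, here is a minimal sketch under our own assumptions. The integrand 4/(1+x^2) on [0, 1], whose integral equals pi, and the name `integrate_pi` are illustrative choices, not the course's example.

```cpp
#include <cassert>
#include <cmath>

// Illustrative only: midpoint-rule estimate of the integral of 4/(1+x^2)
// over [0, 1], which equals pi.
double integrate_pi(long n) {
    assert(n > 0);
    const double dx = 1.0 / static_cast<double>(n);
    double sum = 0.0;
    // Each thread accumulates a private partial sum; OpenMP combines the
    // partial sums at the end of the loop, so no mutex is needed.
#pragma omp parallel for reduction(+ : sum)
    for (long i = 0; i < n; ++i) {
        const double x = (static_cast<double>(i) + 0.5) * dx;
        sum += 4.0 / (1.0 + x * x);
    }
    return sum * dx;
}
```

Without the `reduction` clause, concurrent updates of the shared variable `sum` would be a race condition. Compiled without OpenMP, the pragma is ignored and the function runs serially with the same result up to rounding.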

Slides: Colfax_HOW_Series_04.pdf (3 MB)

Session 05

Distributed Computing, MPI

The last session of the week is an introduction to distributed computing with the Message Passing Interface (MPI) framework. We illustrate the usage of MPI on standard processors as well as Intel Xeon Phi processors and heterogeneous systems with coprocessors. Inter-operation between Intel MPI and OpenMP is illustrated.

Slides: Colfax_HOW_Series_05.pdf (6 MB)

Session 06

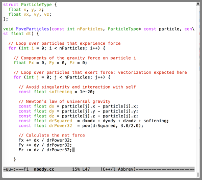

Optimization Overview: N-body

Here we begin the discussion of performance optimization. This episode lays out the optimization roadmap that classifies optimization techniques into five categories. The lecture part demonstrates the application of some of the techniques from these five categories to an example application implementing the direct N-body simulation. The hands-on part of the episode demonstrates the optimization process for the N-body simulation and measures performance gains obtained on an Intel Xeon E5 processor and their scalability to an Intel Xeon Phi coprocessor (KNC) and processor (KNL).
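
For readers unfamiliar with the direct N-body method, the following is a minimal sketch of one all-pairs velocity update. It is not the course's lab code. The `Particles` layout, the function name `update_velocities`, unit masses, a gravitational constant of 1, and the softening term are all assumptions made for this illustration.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Illustrative only. Structure of arrays: each coordinate is contiguous,
// which gives unit-stride access in the inner loop.
struct Particles {
    std::vector<float> x, y, z, vx, vy, vz;
};

// One velocity update of a direct (all-pairs) N-body step, assuming unit
// masses and G = 1. `softening` is added to r^2 to avoid division by zero.
void update_velocities(Particles& p, float dt, float softening) {
    const std::size_t n = p.x.size();
    assert(softening > 0.0f);
    assert(p.y.size() == n && p.z.size() == n);
    assert(p.vx.size() == n && p.vy.size() == n && p.vz.size() == n);
    // Threads share the outer loop; each i writes only its own velocity.
#pragma omp parallel for
    for (std::size_t i = 0; i < n; ++i) {
        float fx = 0.0f, fy = 0.0f, fz = 0.0f;
        // The inner loop is a sum over all particles: a SIMD reduction.
#pragma omp simd reduction(+ : fx, fy, fz)
        for (std::size_t j = 0; j < n; ++j) {
            const float dx = p.x[j] - p.x[i];
            const float dy = p.y[j] - p.y[i];
            const float dz = p.z[j] - p.z[i];
            const float r2 = dx * dx + dy * dy + dz * dz + softening;
            const float inv_r = 1.0f / std::sqrt(r2);
            const float inv_r3 = inv_r * inv_r * inv_r;
            fx += dx * inv_r3;
            fy += dy * inv_r3;
            fz += dz * inv_r3;
        }
        p.vx[i] += dt * fx;
        p.vy[i] += dt * fy;
        p.vz[i] += dt * fz;
    }
}
```

The sketch combines thread parallelism in the outer loop with data parallelism in the inner loop. The self-interaction term (j equal to i) contributes zero because the displacement is zero and the softening keeps the denominator finite.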

Slides: Colfax_HOW_Series_06.pdf (3 MB)

Session 07

Scalar Tuning, Vectorization

We start going into the details of performance tuning. The session will cover essential compiler arguments, control of precision and accuracy, unit-stride memory access, data alignment, padding and compiler hints, and the usage of strip-mining for vectorization. The hands-on part will illustrate these techniques in the implementation of LU decomposition of small matrices. We will begin demonstrating strip-mining and AVX-512CD in the computation of binning.
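
Strip-mining in a binning computation can be sketched as follows. This is an illustration under our own assumptions, not the course's code: the function name `bin_values`, the strip length of 16, and the requirement that inputs lie in [0, 1) are hypothetical choices.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Illustrative only: histogram of values assumed to lie in [0, 1).
// The loop over the data is split into strips so that the index
// computation can be vectorized separately from the histogram update.
std::vector<int> bin_values(const std::vector<float>& v, int n_bins) {
    assert(n_bins > 0);
    constexpr std::size_t STRIP = 16;  // hypothetical strip length
    std::vector<int> hist(n_bins, 0);
    const std::size_t n = v.size();
    for (std::size_t ii = 0; ii < n; ii += STRIP) {
        const std::size_t len = (n - ii < STRIP) ? (n - ii) : STRIP;
        int idx[STRIP];
        // Part 1: pure arithmetic with unit-stride access; vectorizable.
#pragma omp simd
        for (std::size_t k = 0; k < len; ++k) {
            int b = static_cast<int>(v[ii + k] * n_bins);
            idx[k] = (b < n_bins) ? b : n_bins - 1;  // guard against rounding
        }
        // Part 2: two elements of a strip may hit the same bin, so this
        // update is kept as a scalar loop here.
        for (std::size_t k = 0; k < len; ++k) {
            ++hist[idx[k]];
        }
    }
    return hist;
}
```

The second loop is where index conflicts can occur. Conflict-detection instructions such as those in AVX-512CD are aimed at this kind of update pattern, although whether a given compiler uses them here depends on the target and compiler flags.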

Slides: Colfax_HOW_Series_07.pdf (2 MB)

Session 08

Common Multi-threading Problems

This session talks about common problems in the optimization of multi-threaded applications. We revisit OpenMP and the binning example from Session 07 to implement multi-threading in that code. The process takes us to the discussion of race conditions, mutexes, and efficient parallel reduction with thread-private variables. We also encounter false sharing and demonstrate how it can be eliminated. The second example discussed in this episode represents stencil operations. Its discussion deals with the problem of insufficient parallelism and demonstrates how to move parallelism from vectors to cores using strip-mining and loop collapse.
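
Parallel reduction with thread-private variables can be sketched as below. This is a minimal illustration, not the course's code. The function name `count_by_key` is hypothetical, and keys are assumed to lie in the range from 0 to `n_keys - 1`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Illustrative only: count occurrences of each key in [0, n_keys).
std::vector<long> count_by_key(const std::vector<int>& keys, int n_keys) {
    assert(n_keys > 0);
    std::vector<long> total(n_keys, 0);
    const long n = static_cast<long>(keys.size());
#pragma omp parallel
    {
        // Thread-private accumulator: declared inside the parallel region,
        // so every thread owns a separate copy and updates are race-free.
        std::vector<long> local(n_keys, 0);
#pragma omp for nowait
        for (long i = 0; i < n; ++i) {
            ++local[keys[i]];
        }
        // Merge once per thread. The critical section acts as a mutex
        // around the only writes to shared data.
#pragma omp critical
        {
            for (int k = 0; k < n_keys; ++k) {
                total[k] += local[k];
            }
        }
    }
    return total;
}
```

Updating `total` directly in the hot loop would require a mutex or atomic operation on every increment. A shared array with one adjacent slot per thread would avoid the race but invite false sharing, because neighboring slots tend to sit in the same cache line. Separate per-thread containers reduce both problems, at the cost of one short merge per thread.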

Slides: Colfax_HOW_Series_08.pdf (3 MB)

Session 09

Multi-Threading, Memory Aspect

We continue talking about optimization of multi-threading, this time from the memory access point of view. The material includes principles and methods of affinity control and best practices for NUMA architecture, which includes two-way and four-way Xeon processors and Intel Xeon Phi processors in SNC-2/SNC-4 clustering modes. The hands-on part of the episode demonstrates the tuning of matrix multiplication and array copying on a standard processor as well as an Intel Xeon Phi processor and coprocessor.
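
One widely used NUMA practice is to initialize data in parallel with the same thread-to-iteration mapping as the later computation. The sketch below is an illustration only, under the assumption of an operating system with a first-touch page placement policy (the usual default on Linux). The function name `first_touch_sum` is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>

// Illustrative only: allocate, initialize in parallel, then reduce.
double first_touch_sum(long n) {
    assert(n > 0);
    // new double[n] leaves the elements uninitialized, so a large array
    // is typically not yet backed by physical pages at this point.
    std::unique_ptr<double[]> a(new double[n]);
    double* p = a.get();
    // First touch: with a static schedule, each thread writes the block of
    // the array that it will also read in the loop below.
#pragma omp parallel for schedule(static)
    for (long i = 0; i < n; ++i) {
        p[i] = 1.0;
    }
    double sum = 0.0;
    // Same static schedule, hence the same thread-to-block mapping.
#pragma omp parallel for schedule(static) reduction(+ : sum)
    for (long i = 0; i < n; ++i) {
        sum += p[i];
    }
    return sum;
}
```

For this placement to be useful, threads must stay on their cores. With OpenMP this is commonly arranged through the standard `OMP_PROC_BIND` and `OMP_PLACES` environment variables. A container that zero-fills its storage on construction, such as `std::vector<double>(n)`, would touch all pages from a single thread and defeat the purpose.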

Slides: Colfax_HOW_Series_09.pdf (4 MB)

Session 10

Access to Caches and Memory

Memory traffic optimization: how to optimize the utilization of caches, and how to optimally access the main memory. We discuss the requirement of data access locality in space and time and demonstrate techniques for achieving it: unit-stride access, loop fusion, loop tiling and cache-oblivious recursion. We also talk about optimizing memory bandwidth, focusing on the MCDRAM in Intel Xeon Phi processors. The hands-on part demonstrates the application of the discussed methods to the matrix-vector multiplication code.
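
Loop tiling applied to matrix-vector multiplication can be sketched as follows. This is a minimal illustration rather than the course's lab code. The function name `matvec_tiled`, the row-major layout and the `tile` parameter are our own assumptions.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Illustrative only: y = A * x for a row-major matrix A (rows x cols).
// The column loop is tiled so that one tile of x is reused by every row
// while it is still in cache.
std::vector<double> matvec_tiled(const std::vector<double>& A,
                                 const std::vector<double>& x,
                                 std::size_t rows, std::size_t cols,
                                 std::size_t tile) {
    assert(tile > 0);
    assert(A.size() == rows * cols && x.size() == cols);
    std::vector<double> y(rows, 0.0);
    for (std::size_t jj = 0; jj < cols; jj += tile) {
        const std::size_t j_end = (jj + tile < cols) ? (jj + tile) : cols;
        for (std::size_t i = 0; i < rows; ++i) {
            double acc = 0.0;
            // Unit-stride access to both A and x within the tile.
            for (std::size_t j = jj; j < j_end; ++j) {
                acc += A[i * cols + j] * x[j];
            }
            y[i] += acc;
        }
    }
    return y;
}
```

Without tiling, a long vector `x` may be evicted from cache between rows and re-read from main memory for each row. A suitable tile size depends on the cache sizes of the target processor and is best found by measurement.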

Slides: Colfax_HOW_Series_10.pdf (4 MB)

Supplementary Materials

The course is based on Colfax’s book “Parallel Programming and Optimization with Intel Xeon Phi Coprocessors”, second edition. All topics discussed in the training are covered in the book. Practical exercises used in the course come with both the paper and the electronic editions. The book is an optional guide for the HOW series training. Members of Colfax Research can get a discount.