Test-driving Intel® Xeon Phi™ coprocessors with a basic N-body simulation

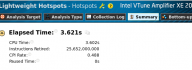

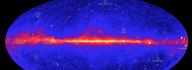

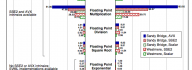

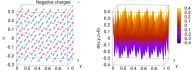

Intel® Xeon Phi™ coprocessors can deliver higher performance and better energy efficiency than Intel® Xeon® processors for certain parallel applications. In this paper, we investigate the porting and optimization of a test problem for the Intel Xeon Phi coprocessor. The test problem is a basic N-body simulation, which is the foundation of a number of applications in computational astrophysics and biophysics. Using common C code for the host processor and for the coprocessor, we benchmark the N-body simulation. The simulation runs 2.3x to 5.4x faster on a single Intel Xeon Phi coprocessor than on two Intel Xeon E5 series processors. The performance depends on the accuracy settings for transcendental arithmetic. We also study the assembly code that the compiler produces from the C code. This allows us to identify strategies for designing C/C++ programs that result in efficient, automatically vectorized applications for Intel Xeon family devices. The visualization shown below demonstrates the results and the performance of the [...]