Cache Traffic Optimization on Intel Xeon Phi Coprocessors for Parallel In-Place Square Matrix Transposition with Intel Cilk Plus and OpenMP

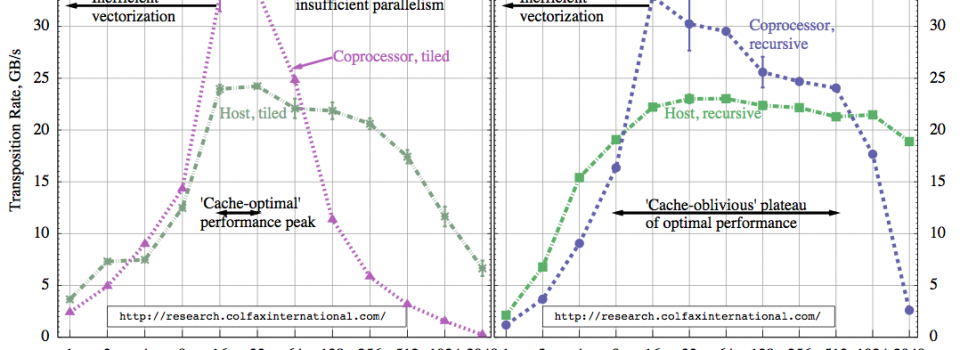

Numerical algorithms that are sensitive to processor cache performance can be optimized by increasing the locality of data access. Loop tiling and recursive divide-and-conquer are common methods for cache traffic optimization. This paper studies the applicability of these methods to in-place square matrix transposition on the Intel Xeon Phi architecture. Optimized implementations in the Intel Cilk Plus and OpenMP frameworks are presented and benchmarked. The cache-oblivious nature of the recursive algorithm is compared to the tunable character of the tiled method. Results show that Intel Xeon Phi coprocessors transpose large matrices faster than the host system; smaller matrices, however, are transposed more efficiently by the host. On the coprocessor, the Intel Cilk Plus framework excels for large matrix sizes but incurs a significant parallelization overhead for smaller sizes, where OpenMP is faster.
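To make the two methods named in the abstract concrete, here is a minimal serial sketch in C of a tiled and a recursive in-place transposition of a row-major square matrix. It is an illustration under stated assumptions, not the paper's implementation: the function names, the `tile` parameter, and the `LEAF` cutoff are hypothetical, and the Intel Cilk Plus and OpenMP parallelization studied in the paper is omitted.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical recursion cutoff; it only limits call overhead and is
   not tuned to any cache size. */
#define LEAF 16

/* Swap A[i][j] with A[j][i] in a row-major n x n matrix. */
static void swap_pair(double *a, size_t n, size_t i, size_t j) {
    double tmp = a[i * n + j];
    a[i * n + j] = a[j * n + i];
    a[j * n + i] = tmp;
}

/* Loop tiling: walk the lower triangle tile by tile.
   `tile` is the tunable parameter (assumed name). */
void transpose_tiled(double *a, size_t n, size_t tile) {
    assert(tile > 0);
    for (size_t ii = 0; ii < n; ii += tile) {
        for (size_t jj = 0; jj <= ii; jj += tile) {
            size_t i_end = ii + tile < n ? ii + tile : n;
            size_t j_end = jj + tile < n ? jj + tile : n;
            for (size_t i = ii; i < i_end; i++)
                for (size_t j = jj; j < j_end && j < i; j++)
                    swap_pair(a, n, i, j);
        }
    }
}

/* Swap the block rows [r0,r1) x cols [c0,c1), which must lie strictly
   below the diagonal, with its mirror image; split the longer side. */
static void swap_blocks(double *a, size_t n, size_t r0, size_t r1,
                        size_t c0, size_t c1) {
    size_t nr = r1 - r0, nc = c1 - c0;
    if (nr <= LEAF && nc <= LEAF) {
        for (size_t i = r0; i < r1; i++)
            for (size_t j = c0; j < c1; j++)
                swap_pair(a, n, i, j);
    } else if (nr >= nc) {
        size_t rm = r0 + nr / 2;
        swap_blocks(a, n, r0, rm, c0, c1);
        swap_blocks(a, n, rm, r1, c0, c1);
    } else {
        size_t cm = c0 + nc / 2;
        swap_blocks(a, n, r0, r1, c0, cm);
        swap_blocks(a, n, r0, r1, cm, c1);
    }
}

/* Recursive divide-and-conquer on the diagonal block [lo,hi) x [lo,hi):
   transpose both diagonal halves, then swap the off-diagonal block. */
static void transpose_diag(double *a, size_t n, size_t lo, size_t hi) {
    size_t len = hi - lo;
    if (len <= LEAF) {
        for (size_t i = lo; i < hi; i++)
            for (size_t j = lo; j < i; j++)
                swap_pair(a, n, i, j);
    } else {
        size_t mid = lo + len / 2;
        transpose_diag(a, n, lo, mid);
        transpose_diag(a, n, mid, hi);
        swap_blocks(a, n, mid, hi, lo, mid);
    }
}

void transpose_recursive(double *a, size_t n) {
    transpose_diag(a, n, 0, n);
}

/* Helper for checking: returns 1 if both variants produce the transpose
   of a test matrix with A[i][j] = i*n + j, and 0 otherwise. */
int transposes_agree(size_t n, size_t tile) {
    if (n == 0) return 1;
    double *t = malloc(n * n * sizeof *t);
    double *r = malloc(n * n * sizeof *r);
    int ok = (t != NULL && r != NULL);
    if (ok) {
        for (size_t k = 0; k < n * n; k++) t[k] = r[k] = (double)k;
        transpose_tiled(t, n, tile);
        transpose_recursive(r, n);
        for (size_t i = 0; i < n && ok; i++)
            for (size_t j = 0; j < n; j++) {
                double want = (double)(j * n + i);
                if (t[i * n + j] != want || r[i * n + j] != want) { ok = 0; break; }
            }
    }
    free(t);
    free(r);
    return ok;
}
```

In this sketch the tiled variant exposes `tile` as a knob to be tuned per platform, while the recursive variant keeps subdividing until blocks are small, which is what lets it adapt to the cache hierarchy without a platform-specific parameter. Every swap touches a distinct pair of elements, so the tiles and the recursive calls are independent units of work that a parallel framework could distribute.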

COMMENTS:

- If you are interested in this paper, make sure to also read a follow-up publication (improved results, downloadable public code) available at http://colfaxresearch.com/?p=27

- Note that in the present paper, the rate of transposition in GB/s is calculated as the matrix size divided by the transposition time. In the follow-up paper and most other publications on the subject, this ratio is further multiplied by 2. To compare the transposition rates reported in this paper to the follow-up results, multiply them by 2.
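The conversion between the two conventions can be written out as follows. The symbols $S$ (matrix size in GB) and $t$ (transposition time in seconds) are notation assumed here, and the numbers are purely hypothetical, not results from either paper.

$$
R_{\text{this paper}} = \frac{S}{t}, \qquad
R_{\text{follow-up}} = \frac{2S}{t} = 2\,R_{\text{this paper}}
$$

For example, a hypothetical $S = 8$ GB matrix transposed in $t = 0.2$ s would be reported as $40$ GB/s under the convention of this paper and as $80$ GB/s under the follow-up convention. One plausible reading of the factor of 2 is that every matrix element is both read and written during transposition, although the comment above does not state the reason.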

Complete paper: Colfax_Transposition_Xeon_Phi.pdf (602 KB)

Follow-up publication: http://colfaxresearch.com/?p=27