Optimization Techniques for the Intel MIC Architecture. Part 1 of 3: Multi-Threading and Parallel Reduction

This is part 1 of a 3-part educational series of publications introducing select topics on optimization of applications for the Intel multi-core and manycore architectures (Intel Xeon processors and Intel Xeon Phi coprocessors).

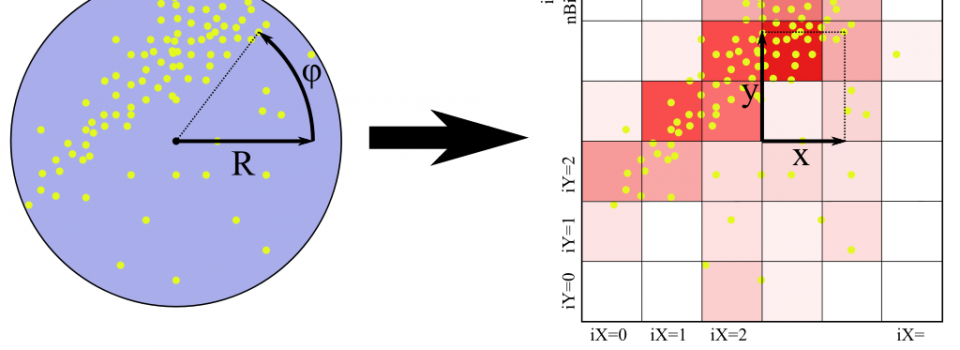

In this paper we focus on thread parallelism and race conditions. We discuss the use of mutexes in OpenMP to resolve race conditions, and we show how to implement efficient parallel reduction using thread-private storage and mutexes. For a practical illustration, we construct and optimize a micro-kernel for binning particles based on their coordinates. Workloads like this one occur in applications such as Monte Carlo simulations, particle physics software, and statistical analysis.
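To make the idea concrete, here is a minimal sketch of a parallel reduction with thread-private storage and a mutex, written in C++ with OpenMP. It is not the code from the paper: the names `bin_index` and `bin_particles`, the one-dimensional coordinate, and the assumption that every coordinate lies in `[0, x_max]` are all illustrative choices made for this example. Each thread fills its own private histogram without synchronization, then adds it to the shared histogram inside a `critical` section, so the mutex is taken once per thread rather than once per particle.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: maps a coordinate in [0, x_max] to a bin index.
inline std::size_t bin_index(float x, float x_max, std::size_t n_bins) {
    std::size_t i = static_cast<std::size_t>(x / x_max * n_bins);
    return i < n_bins ? i : n_bins - 1;  // x == x_max falls into the last bin
}

// Counts how many particles fall into each of n_bins equal-width bins.
// Assumption: all coordinates satisfy 0 <= x <= x_max.
std::vector<long> bin_particles(const std::vector<float>& x,
                                float x_max, std::size_t n_bins) {
    assert(n_bins > 0 && x_max > 0.0f);
    std::vector<long> global_bins(n_bins, 0);
    const long n = static_cast<long>(x.size());

    #pragma omp parallel
    {
        // Thread-private storage: declared inside the parallel region,
        // so every thread gets its own copy and no race can occur here.
        std::vector<long> local_bins(n_bins, 0);

        #pragma omp for
        for (long p = 0; p < n; ++p) {
            ++local_bins[bin_index(x[p], x_max, n_bins)];
        }

        // Reduction step: the critical section acts as a mutex, so only
        // one thread at a time updates the shared histogram.
        #pragma omp critical
        {
            for (std::size_t b = 0; b < n_bins; ++b) {
                global_bins[b] += local_bins[b];
            }
        }
    }
    return global_bins;
}
```

Compiled with OpenMP enabled (for example `-fopenmp` with GCC), the loop is shared among threads; without it the pragmas are ignored and the function still returns the same counts serially. Placing the increment of a shared array directly inside the loop, protected by a mutex on every iteration, would also be correct but would serialize the threads, which is the cost the thread-private copy avoids.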

The optimization technique discussed in this paper leads to a performance increase of 25x on a 24-core CPU and up to 100x on the MIC architecture compared to a single-threaded implementation on the same architectures.

In the next publication of this series, we will demonstrate further optimization of this workload, focusing on vectorization.

See also:

- Part 1: Multi-Threading and Parallel Reduction

- Part 2: Strip-Mining for Vectorization

- Part 3: False Sharing and Padding

Complete paper: Colfax_Optimization_Techniques_1_of_3.pdf (370 KB)

Source code for Linux: Colfax_Tutorial_Binning.zip (6 KB)

Or read online at TechEnablement

Part 2 of 3 is also published and can be found here: http://colfaxresearch.com/?p=709

Hi,

Thanks for the article. The label for Figure 3 appears to be the same as that for Figure 2; please check and correct.

I was happy with my code (running a bit faster on Phi than on CPU) until I looked at your videos and realized something’s wrong with my vectorization 🙂 Anyway, thanks for the good materials!